In April, EEG Video hosted the first in our series of Zoom webinars to educate media professionals about our closed captioning innovations.

Closed Captioning Solutions for A/V, Live Events, and Online Communications • April 28, 2020

During this well-attended live online event, Bill McLaughlin, VP of Product Development for EEG, walked attendees through our solutions built for A/V, live events, and online communications. This essential webinar covered:

- All about EEG’s closed captioning solutions for live events and more
- How EEG’s products can be used for different captioning needs and workflows
- The latest closed captioning advancements at EEG
- A live Q&A session

Featured EEG solutions included:


Transcript

Regina: Hi everyone, and thanks so much for joining us today on the webinar Closed Captioning Solutions for A/V, Live Events, and Online Communications. So glad you all could join us here today.

My name is Regina Vilenskaya and I'm the marketing content specialist here at EEG. I'll be the moderator today. I'll be joined by Bill McLaughlin, the VP of product development. Bill has led EEG webinars in the past and delivered talks about closed captioning and the A/V industry in general.

So during today's webinar, Bill will be covering who we are and what EEG does, the EEG solutions built for A/V, live events, and online communications, and the latest products and features. If you have any questions during today's webinar, you can enter those questions into the Q&A tool at the bottom of your window and at the end of the webinar, we will try to answer as many questions as possible. So that about covers it and I'm now going to welcome Bill to kick off the webinar Closed Captioning Solutions for A/V, Live Events, and Online Communications. Welcome, Bill!

Bill: Thank you. Great job, Regina.

Regina: Thank you.

Bill: Thank you. So, I hope that some of you came to last week's webinar, which focused on our broadcasting solutions, and found it interesting enough to come back again. That would be really great. If you're specifically interested in the A/V side of the product line, that's awesome, too.

Today's webinar is going to focus more on our A/V, live events, and live meetings product line. This is an area where we've been doing a lot of new development and seeing a lot of growth. Originally, EEG was primarily a company providing broadcasting solutions and services, and that's really been evolving with new economies of scale in video capture, video production, and video streaming, and with the ability to reach a broad audience through social media.

Video meetings are growing too, especially now, with so many people working from home; in the past month we've had enormous interest in what we can do for webinars like this one. That includes platforms like Zoom, as well as Facebook Live and YouTube videos, and everything from trade show-style conferences to academic conferences and graduations. We've been working in all of these sectors for many years, providing encoders on a rental basis and captioning services (both through human transcription partners and through Lexi automatic captioning), and it's a time of a lot of change there.

Unfortunately, some event sectors like sports are really slowing down, but virtual meetings and related technologies have been very active, and I think EEG right now has a very good set of solutions in that area. We've been very busy talking to a lot of customers, and it'd be great to hear in the Q&A from anybody who has interesting applications or recent stories, or who just wants to learn more for the future.

So today we're going to primarily focus on three EEG products that are different from what we talked about in last week's webinar.

We’ll talk about Falcon, which is a software-based, cloud-hosted closed caption encoder. It primarily works with RTMP video, so it's built for cloud-based streaming applications that go directly to social media or other video platforms.

In the AI captioning space, we will talk primarily about Lexi Local, a brand new product we're offering this spring. It brings the power of Lexi automatic captioning into an on-premises package that doesn't require a cloud connection or a connection to iCap over the internet. It's basically an appliance that provides audio-to-text transcription and live closed captioning, with most of the features you'd expect from a cloud-based solution, but all local. That gives you full control over the data, and it's great for any type of application where it's hard to get an external internet drop.

Finally, especially for in-person live events as those start to resume, we’ll be showing the AV610. That product debuted at NAB last year, and we've made quite a few improvements to it since. The AV610 is an open caption live display product that can take various sources of captioning and produce an attractive, configurable, customizable on-screen display of the caption data.

Falcon

So we'll begin by talking about Falcon, which, again, is the cloud-based software caption encoder for adding live captions to streaming video. You'll see it works with almost any streaming video platform: social media, live video applications, and even a lot of video conferencing applications.

Falcon is a cloud-hosted software-as-a-service system: you sign up on EEG Cloud for a monthly subscription, so there's nothing you need to install or permanently purchase for use at the video capture site. It works with the types of streaming systems commonly found in a digital classroom, a town hall, a church or other worship site, or even a home streaming setup like you would use for a typical web meeting production.

It's also compatible with most of the ways those videos are distributed, whether that’s streaming to a social media platform, going out on something like Livestream or Ustream, or custom video applications that use a server like Wowza to deliver a more customized video experience on your own website.

That video could be subscription-based or delivered free, but basically viewers come to your website and see the video. When everything's hooked up with Falcon and you have a player installed that supports closed captions, the end goal is that viewers get the same experience they would have with a typical television program and a remote control: the captions are embedded in the feed, and each viewer can turn them on and off at their own discretion.

Live Streaming Setup (without Falcon-Sourced Captions)

To look at what the typical setup would be, let's start by imagining that you're using a PC- or Mac-based software streaming encoder. Telestream Wirecast is one of the most popular ones. OBS Studio, which is open source, is also very useful and common. These programs typically send an RTMP feed to a platform like YouTube, Facebook, or Twitch; you go into your account on the platform and get an ingest URL where you send your RTMP stream. The platform then takes that one RTMP stream and converts it into the different sizes and resolutions appropriate for all the different mobile devices, browsers, and set-top applications it supports.

So, you just uplink one RTMP stream and the rest of the conversions are generally taken care of, and as we'll see, that typically includes closed captions. At this stage, though, there are no captions on the video; it’s just video and audio going to your platform.

Falcon Workflow

To add captions, Falcon goes in the middle, between the original video stream coming from, let's say, Wirecast and your platform, say, Facebook. In the middle of this diagram is Falcon, which runs on a set of servers hosted by EEG at eegcloud.tv. Falcon takes your ingest stream: you go into the settings in Wirecast and, instead of streaming to the Facebook URL directly, you stream to the Falcon URL, which you get from your Falcon account.

You then tell your Falcon account where to forward the video once captions have been added (in this case, Facebook), and the video passes through Falcon with about one second of additional delay. Meanwhile, the audio from your program is sent securely over iCap to a transcription service that you contract with.

Built into the system, you can use EEG's Lexi automatic captioning for an additional hourly cost. You can also contract with any of the hundreds of providers in the United States and around the world that deliver captions over iCap using various methods, often humans using a steno keyboard. Those captions are returned to the Falcon server through iCap, and Falcon embeds them with the video data in the RTMP stream. There are some options for how that's done, but typically it uses the CEA-608 captioning standard. The stream is passed on to the platform, which wraps the captions in the appropriate formats for all the different players, so any viewer using a phone or browser player that supports closed captions will see the little CC button somewhere on their player. When they activate captions, they’ll be able to see the text.
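One reason 608 captions come out looking fairly predictable is that the standard is tightly constrained: each caption row holds at most 32 characters, and roll-up captions show 2, 3, or 4 rows. The helper below is a hypothetical sketch (not part of Falcon or iCap) of how transcript text could be wrapped to that width, keeping only the newest rows the way a roll-up display does:

```python
import textwrap

ROW_WIDTH = 32  # CEA-608 allows at most 32 characters per caption row


def to_608_rows(text: str, rows: int = 3) -> list[str]:
    """Hypothetical helper: wrap text to 608-width rows and keep the newest `rows` rows."""
    if rows not in (2, 3, 4):  # 608 roll-up modes show 2, 3, or 4 rows
        raise ValueError("roll-up depth must be 2, 3, or 4 rows")
    # textwrap breaks words longer than the width, so no row can exceed 32 characters
    wrapped = textwrap.wrap(text, width=ROW_WIDTH)
    return wrapped[-rows:]


for row in to_608_rows("This meeting is being captioned using Lexi and Falcon through the Zoom caption link.", rows=2):
    print(row)
```

A real encoder also handles caption positioning, control codes, and timing; this only illustrates the row-width constraint.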

Exactly how that data is formatted and displayed can depend on which device or player is used, but typically it comes out in a fairly predictable way that matches what the captioner encoded. Here you see a screenshot of what it looks like when you're actually in Falcon on EEG Cloud.

You have an RTMP ingest URL and stream key assigned specifically to your account; you can regenerate them as needed, and you can use them for as many events as you like. You enter them in the streaming encoder. In the left panel, you can monitor the input video and see when your video arrives at Falcon, and very shortly after you start broadcasting to Falcon, you should also see video in the output window.

When captioning begins, you'll see captions in the output window as well, and very shortly afterward your platform should light up too: if you're in something like YouTube’s producer window, you’ll see the video arrive at YouTube and can monitor the captions from there. In the output destinations, you can also conveniently use Falcon as an RTMP splitter. You can send to multiple destinations, in any combination of the popular social media platforms and custom servers; any RTMP URL is okay.

You can also use RTMP over SSL, at both the input and the output of Falcon. By default, Falcon pushes RTMP to your URL, but you can also pull RTMP data from Falcon, so Falcon effectively acts as the server; if you have an application that needs to pull the video from an external server, we can do that, too. At any given time, you assign Falcon to a certain caption agency or service and provide a unique Access Code. The caption agency receives a notification that an event has been shared with them. With a human caption agency, you would generally need a basic contract and would schedule the event in advance, but essentially the agency uses that Access Code to reach your event and send the captions.
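Most software encoders, including Wirecast and OBS, ask for an RTMP server URL and a stream key separately and typically publish to the two joined as one path. The sketch below is hypothetical (it is not an EEG or Falcon API): it joins each server URL and key, accepts only `rtmp://` or `rtmps://` (RTMP over TLS), and builds a list of forwarding targets in the spirit of using one captioned output as a splitter to several destinations. The hostnames are placeholders.

```python
from urllib.parse import urlsplit

ALLOWED_SCHEMES = {"rtmp", "rtmps"}  # plain RTMP and RTMP over TLS


def publish_url(server_url: str, stream_key: str) -> str:
    """Join an RTMP server URL and stream key the way many encoders do."""
    scheme = urlsplit(server_url).scheme.lower()
    if scheme not in ALLOWED_SCHEMES:
        raise ValueError(f"unsupported scheme: {scheme!r}")
    return server_url.rstrip("/") + "/" + stream_key


def fan_out(destinations: dict[str, tuple[str, str]]) -> dict[str, str]:
    """Hypothetical splitter config: destination name -> (server URL, stream key)."""
    return {name: publish_url(url, key) for name, (url, key) in destinations.items()}


targets = fan_out({
    "platform": ("rtmps://ingest.example.com:443/app", "key-1"),
    "custom": ("rtmp://media.example.net/live", "key-2"),
})
print(targets)
```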

Using HTTP Falcon to Send Captions

If you’re using Lexi, that’s the Access Code you would plug into the Lexi engine. Now, some platforms actually have a separate HTTP uplink for captions. Zoom is one of these: instead of sending an RTMP stream through Falcon and having captions added to it, with Zoom you have a separate HTTP link that receives the caption text only. If you're the presenter or organizer for a meeting, you can get that URL by clicking the CC button at the bottom of the window. It's typically unique for each Zoom meeting.
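Falcon handles this HTTP uplink for you. For readers curious what sending caption text to such a link involves, here is a rough sketch based on our understanding of Zoom's third-party closed caption API: each caption is POSTed as plain text to the meeting's caption URL, with an incrementing `seq` parameter and a `lang` parameter appended. Treat those parameter names as assumptions and check Zoom's current documentation; the function names are hypothetical and the URL in the example is a placeholder.

```python
import urllib.request
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def build_caption_request(token_url: str, text: str, seq: int,
                          lang: str = "en-US") -> urllib.request.Request:
    """Build (but do not send) a POST of one caption to a per-meeting caption URL.

    Assumes the Zoom-style `seq` (sequence number) and `lang` query parameters.
    """
    parts = urlsplit(token_url)
    # Keep the meeting-specific parameters already in the URL, then append ours
    query = parse_qsl(parts.query, keep_blank_values=True)
    query += [("seq", str(seq)), ("lang", lang)]
    url = urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(query), parts.fragment))
    return urllib.request.Request(
        url,
        data=text.encode("utf-8"),
        headers={"Content-Type": "text/plain"},
        method="POST",
    )


def send_caption(token_url: str, text: str, seq: int) -> int:
    """Send one caption and return the HTTP status code."""
    req = build_caption_request(token_url, text, seq)
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status
```

A sender would increment `seq` for every caption it posts during the meeting.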

As a viewer, you'll just see the CC button when captions are present and be able to view them. This meeting is actually being captioned, if anybody has noticed, using Lexi and Falcon through the HTTP link for Zoom. A couple of other platforms support that style of captions, and Falcon supports them, too.

Using the HD492 iCap Encoder to Stream Content

Usually, when you're using any kind of video conferencing or software streaming encoder, Falcon is going to be the best approach: it doesn't require you to install additional equipment, it doesn't really require cabling, and it's compatible with a pure software workflow, so it has a light footprint.

But in some situations, even though your final target might be social media or an online video platform, you might have some video production equipment that's using SDI instead of being all in software. And in that case, another thing to consider is using the HD492 iCap encoder for captioning, which is a physical SDI video caption encoder.

It puts the captions in the SDI VANC output. Typical hardware streaming encoders that take an SDI input, like a Teradek Cube or an AWS Elemental Live system (and there are plenty of other brands), will take the SDI VANC captions and carry them into an RTMP or HLS (HTTP Live Streaming) output, so the captions are preserved through the workflow that way.

So if you have on-prem systems producing SDI video, or you're looking to record SDI or feed other equipment that could need SDI captions, like a link to local public access cable at a church, the EEG HD492 might be a good approach. For viewers watching on the web, the result is roughly the same whether you use this SDI encoder or Falcon.

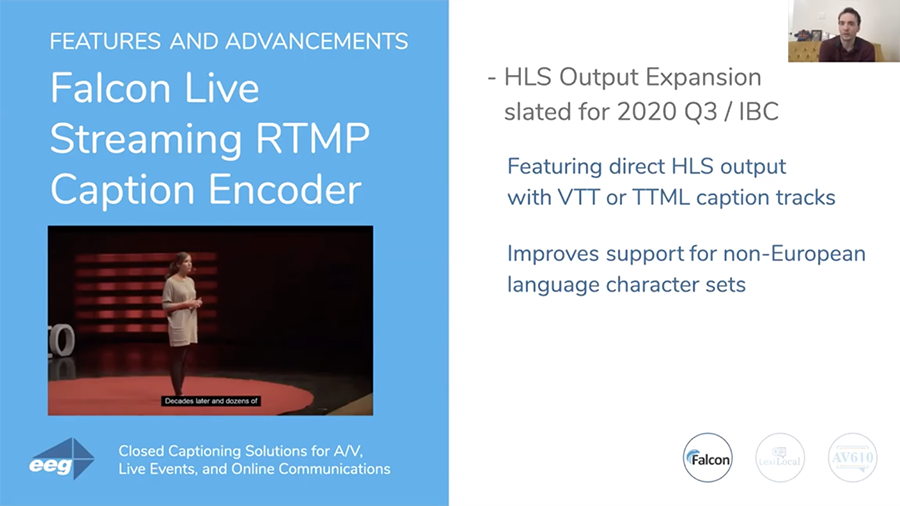

Falcon Features and Advancements

Falcon currently streams mostly in RTMP. There is also an HLS output available from Falcon that a couple of clients per stream can pull down. The current Falcon HLS output still uses embedded 608 captions, and one limitation customers will find is that 608 supports a pretty limited number of languages: only those that use an extended Latin character set, so English, Spanish, French, Italian, German, and a few other European languages.
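The 608 character repertoire is essentially basic Latin plus a small set of special symbols and Western European accented letters. The hypothetical check below uses Latin-1 (ISO 8859-1) encodability as a rough proxy for "this text can probably be carried in 608". It is an approximation, not the actual 608 character table: a few 608 symbols, such as the music note (♪), fall outside Latin-1, and the two sets don't match exactly in the other direction either, so treat a pass as a hint only.

```python
def likely_608_compatible(text: str) -> bool:
    """Rough proxy only: True if every character encodes in ISO 8859-1 (Latin-1)."""
    try:
        text.encode("latin-1")
    except UnicodeEncodeError:
        return False
    return True


for sample in ("¿Dónde está la cámara?", "Grüße aus München", "你好", "Привет"):
    print(sample, likely_608_compatible(sample))
```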

To support more languages, this summer we're releasing an extended version of the Falcon HLS output that will contain WebVTT text tracks. With this feature, you'll be able to deliver captions to any player that supports WebVTT text chunks, in any language that you can pass into the Falcon encoder in UTF-8 and that has a font available in the player and on the viewer's device.

This will support common languages including Chinese, Japanese, Korean, Hindi, Cyrillic-script languages, and Arabic-script languages. It's a big expansion that we hope will make streaming captions much more of an international solution, especially in combination with the iCap Translate service, which can provide AI translation into additional languages from a single caption language that matches the audio.
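The details of Falcon's forthcoming WebVTT output aren't described here, but the format itself is simple text, which is what makes it friendly to any UTF-8 language. As a minimal sketch (function names hypothetical), a WebVTT document is a `WEBVTT` header, a blank line, and then cues, each a `start --> end` timing line followed by the caption text. HLS WebVTT segments additionally carry an `X-TIMESTAMP-MAP` header to align cues with the video timeline, which this sketch omits.

```python
def vtt_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp, hh:mm:ss.ttt."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def build_vtt(cues: list[tuple[float, float, str]]) -> str:
    """Build a minimal WebVTT document from (start, end, text) cues."""
    lines = ["WEBVTT", ""]
    for start, end, text in cues:
        lines.append(f"{vtt_timestamp(start)} --> {vtt_timestamp(end)}")
        lines.append(text)
        lines.append("")  # a blank line ends each cue
    return "\n".join(lines)


document = build_vtt([(1.0, 3.5, "大家好"), (3.5, 6.0, "Привет всем")])
payload = document.encode("utf-8")  # WebVTT files are UTF-8
print(document)
```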

Lexi Automatic Captioning

So, after that discussion of Falcon, I'm going to pivot a little to talk about caption delivery, mostly automatic captioning. For a lot of large streaming events that are more on a broadcast scale, customers are pretty split between automatic captioning and using a third-party service to provide human captioning.

Especially for a difficult-to-transcribe video, for example a panel discussion with several different speakers, you can often get the best results with a human captioner. But that's very difficult to scale to communications video, where you might be producing a very large number of videos, often for relatively small audiences. So, for budgetary and logistical reasons, a lot of streaming and web meeting applications go with an automatic captioning solution.

EEG's Lexi is really a best-of-breed automatic captioning solution. Its accuracy is very good: generally over 90% with proper modeling and a relatively easy video environment, and you can reach 95% a lot of the time. It has quite low latency and a lot of extra features, like the custom vocabulary feature, which can be very important in presentation domains with technical topics, acronyms, and company product names. Using Topic Models well can make a big difference, and that’s something we can help with a lot on the support side as well.
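The webinar doesn't say how Lexi's accuracy figures are measured. A common convention in speech recognition is accuracy = 1 − WER, where the word error rate (WER) is the word-level edit distance (substitutions + deletions + insertions) between a reference transcript and the system output, divided by the number of reference words. Under that assumption, 95% accuracy corresponds to a 5% WER. A minimal sketch:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = word-level edit distance / number of reference words (case-insensitive)."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev holds one row of the dynamic-programming table: distances between the
    # reference words processed so far and every prefix of the hypothesis
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


wer = word_error_rate("the cat sat on the mat", "the cat sat on mat")
print(f"WER {wer:.3f}, accuracy {1 - wer:.3f}")
```

Real scoring pipelines usually normalize punctuation and numbers as well; this sketch only ignores case.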

If you're using Falcon, adding Lexi is really a one-click integration. All you need to do is pass the Access Code you've created for your Falcon stream to the Lexi service, press Go, and it captions.

Lexi Local

Our major Lexi release for this virtual NAB cycle is a local version of the product. Lexi Local runs on a rackmount server. Unlike cloud Lexi, which integrates smoothly with cloud services like Falcon but runs remotely from your plant, Lexi Local is placed in the video capture or video production center and provides captioning directly to an encoder within that center.

Lexi Local Workflow

Where this is most important is in cases like corporate meetings, government agencies, or health: anywhere with a very strict data confidentiality mandate, where you can't easily get approval to send audio data to human or automatic services in the cloud outside your organization because of data privacy concerns.

Lexi Local goes directly in the facility, and none of the data needs to leave for the cloud or the internet. Neither EEG nor any other provider will have access to any of the audio on Lexi Local, even for technical support or any other theoretical debugging purpose. It all stays locally in your enterprise.

So, how does it work? Apart from that, really the same way. You can use the same on-prem encoding devices: any of our SDI equipment; Alta, our on-prem software encoder for MPEG transport streams and SMPTE 2110 video; or anything else with iCap connection capability, whether through an iCap proxy card or a third-party integration like Imagine’s Versio system. Lexi Local writes the captions and you get a captioned video output, but, critically, your encoder is connected to its local Lexi Local server and not out to the cloud.

Everything you need to operate a basic captioning system is included on this server, and you can connect multiple encoders to it. If you need to run more than one channel of live captioning simultaneously, there are additional licensing costs for the extra channels, but you don't need multiple pieces of hardware.

You can connect the system to custom model data, which, again, is stored entirely locally, and even to things like dial-in cards for cases where you're using external captioners. You can also use a customer-supplied VPN to connect a human captioner into the system as needed. So everything's on that box, and everything is local.

Lexi vs Lexi Local

For Lexi Local, you pay a fixed annual license cost for the number of simultaneous streams you need to run. That's a very different model from cloud-based Lexi, which is essentially pay-per-hour. Lexi Local offers comparatively very good value if it's in fairly continuous use, whereas cloud Lexi can offer better value for sporadic events and occasional use, because it's software as a service instead of something you buy to own. That being said, in situations where data security is the key issue, Lexi Local becomes the go-to.
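The trade-off between a flat annual license and pay-per-hour pricing comes down to a break-even number of captioned hours: the annual license cost divided by the hourly rate. No prices were given in the webinar, so the inputs below are yours to supply. This hypothetical sketch compares a single channel and ignores everything except the two prices (hardware, installation, staff time, and data-security requirements are left out).

```python
def break_even_hours(annual_license_per_channel: float, cloud_rate_per_hour: float) -> float:
    """Captioned hours per year (per channel) at which both pricing models cost the same."""
    if cloud_rate_per_hour <= 0:
        raise ValueError("hourly rate must be positive")
    return annual_license_per_channel / cloud_rate_per_hour


def cheaper_option(hours_per_year: float, annual_license_per_channel: float,
                   cloud_rate_per_hour: float) -> str:
    """Return 'local' if the flat license costs less than pay-per-hour for this usage."""
    cloud_cost = hours_per_year * cloud_rate_per_hour
    return "local" if annual_license_per_channel < cloud_cost else "cloud"
```

Usage above the break-even point favors the flat license; occasional events below it favor pay-per-hour.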

In terms of caption quality, both products offer fairly similar capabilities. Cloud-based Lexi offers a bit more in terms of continuous learning and continuous model updates. But for Lexi Local, we offer a quarterly update channel that's included with the subscription, so the Lexi Local AI can also improve; it just relies on the customer to actually install the updates on their local system.

AV610 CaptionPort

Pivoting a little bit now to another A/V application that's really interesting, we're going to talk about live display captioning. And this is most obviously relevant for an in-person event, something like trade shows, academic conferences, technology conferences, a corporate sales meeting.

We've also seen applications where the output of the AV610, which is the product we’ll talk about, is streamed as well. However, it's an open caption product. In the model we've been talking about with Falcon and caption encoding, captions are closed: they're hidden as data in the video stream, and you won't see them until an individual viewer presses the closed caption button and opts to see them. The AV610 provides similar services, but for open captions.

In an open caption methodology, your output video has captions burned into it, effectively by a character generator. That can be an overlay that looks like live closed captions, or, as you'll see, there are a couple of options for text-only displays, text with graphics, or text in its own part of the screen alongside a scaled video display.

The benefit is that where viewers are present, you can simply have a screen that shows the caption data. Everyone who wants to see the text will look at that screen, and it generally won't interfere with viewers who aren't reading the text and are just following the event from the audio and the live visuals.

Beyond that, the 610 lets you create a more customized display than the basic display you see with closed captions. It gives the producer a lot more flexibility to decide how the captions look and to make them larger and clearer, and it supports really all languages, not just those supported by a standard closed caption encoder, which has the restrictions we discussed in the Falcon section.

The 610 can take captions from any source of caption data you would commonly use. Any captioner that uses iCap is supported; Lexi or any other software-based system that integrates with iCap can go right into a 610, the same as into a closed caption encoder. You can also feed it from any other source you would feed a closed captioning system from: a dial-up modem, Telnet text, a serial port, or a teleprompter. In terms of inputs, it really is a closed caption encoder, so you don't need special software or knowledge beyond closed captioning to use the 610.

In the screenshot here, you can see one of the common use cases: a PowerPoint presentation with text throughout the slides, the same as the type of meeting we're doing now. To make captions readable from a distance, they have to take up more of the screen than they typically do on Zoom, and then they cover more of the slide text.

And the AV610 provides some options to get around that trade-off. With the option that’s shown, there is a built-in video scaler. So the video is proportionally shrunk on the screen to provide this outer bar that’s a safe space for closed captions to go so that they’re not interfering with the text presentation.

And especially, for example, if this was mounted on a large screen behind a speaker at an event, you could use this top space and then that's going to be very easily visible anywhere in the audience, you know, and makes only a small difference in the amount of space that's available for the slide.

AV610 Workflow (Scaled Video)

When using the 610 with an SDI video input as discussed here, all you need to do is feed in a regular full-screen presentation, and what you get out is the scaled presentation plus a caption area, which can be placed either at the top or at the bottom of the screen.
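The 610's scaler settings aren't detailed here, but the geometry behind a proportionally shrunk picture is simple: reserving a caption band of height h in a W × H frame leaves the video H − h tall, and scaling the width by the same factor (H − h)/H keeps the aspect ratio, with the picture centered horizontally. A hypothetical helper that computes that layout for a top or bottom band:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layout:
    video_x: int
    video_y: int
    video_width: int
    video_height: int
    caption_y: int        # top edge of the caption band
    caption_height: int


def scaled_layout(width: int, height: int, caption_height: int, position: str = "top") -> Layout:
    """Shrink the picture proportionally to free a full-width caption band."""
    if not 0 < caption_height < height:
        raise ValueError("caption band must be between 0 and the frame height")
    if position not in ("top", "bottom"):
        raise ValueError("position must be 'top' or 'bottom'")
    video_height = height - caption_height
    # Scale the width by the same factor as the height to preserve the aspect ratio
    video_width = round(width * video_height / height)
    video_x = (width - video_width) // 2  # center the picture horizontally
    if position == "top":
        return Layout(video_x, caption_height, video_width, video_height, 0, caption_height)
    return Layout(video_x, 0, video_width, video_height, video_height, caption_height)


print(scaled_layout(1920, 1080, 270, "top"))
```

With a 270-line band in a 1080p frame, the picture scales to 75% (1440 × 810), leaving 240-pixel side margins.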

For the captions and the audio, this works the same way as Falcon or an iCap caption encoder like the HD492. Here's another example of how this is used.

Here, it's a lower display because people are seated in a conference room, so it makes sense to put the captions at the bottom of the screen instead of the top. Again, that’s a simple configuration on the box, so you can see what makes the most sense for a given application and what people respond best to.

AV610 Workflow (Text with Background)

Another new workflow for the 610 (we've just been sending out these updates recently) is the ability to make a much larger text display with just a static image. With this workflow, you don't need to feed in an SDI video source at all.

The 610 can generate its own output video signal from its internal processor. Before the event, you upload something like a logo for your organization or your conference, and you send the program audio into the 610 using the audio-only XLR connector, which supports either analog or AES digital audio.

The audio is used to generate the caption display, again using iCap or any other mechanism you want for getting captions into the 610 (it could be Lexi Local, a modem, anything), and what you get on the output is a scrolling text display that can take up almost the entire screen. On television, captions typically don't fill the screen, because closed caption viewers also want to see the visual part of the program; they're not looking to have the entire screen obstructed.

But for an A/V setup, you may actually want an entire screen of caption text. Used this way, the AV610 is still an SDI product, but it's very inexpensive to add an SDI-to-HDMI converter, so you can turn a standard HDMI television into a portable, inexpensive caption screen for any kind of conference room. The screen can be decorated a bit with your choice of logo, but what really stands out is the text. The visual part of the program may be on a different screen, or it may just be what’s happening on the stage or at the meeting table, with no real video component.
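The webinar doesn't describe the 610's internal text engine. As a hypothetical illustration of a full-screen scrolling caption display, the sketch below appends incoming words to the current line until a column limit is reached, then starts a new line, keeping only the newest lines on screen (a `deque` with `maxlen` drops the oldest automatically). Words longer than a line are not split in this simplified version.

```python
from collections import deque


class ScrollingCaptionDisplay:
    """Hypothetical full-screen text display that keeps only the newest lines."""

    def __init__(self, columns: int, visible_lines: int) -> None:
        if columns <= 0 or visible_lines <= 0:
            raise ValueError("columns and visible_lines must be positive")
        self.columns = columns
        self.lines: deque[str] = deque(maxlen=visible_lines)

    def add_text(self, text: str) -> None:
        for word in text.split():
            current = self.lines[-1] if self.lines else ""
            candidate = f"{current} {word}" if current else word
            if self.lines and len(candidate) <= self.columns:
                self.lines[-1] = candidate  # the word still fits on the current line
            else:
                self.lines.append(word)  # start a new line; oldest line scrolls off

    def render(self) -> str:
        return "\n".join(self.lines)


display = ScrollingCaptionDisplay(columns=10, visible_lines=2)
display.add_text("one two three")
display.add_text("four five six")
print(display.render())
```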

To control that, all you need to do is upload an image. We accept a range of formats, such as JPEG, SVG, and other vector graphic formats, and you can choose how much of the video the image takes up, how the text alignment should look, and various font, color, and size settings. That's a lot more control on the production side than you have over closed captions. Especially in the streaming world, an unfortunate reality producers typically have to live with is that the visual styling of closed captions can differ depending on which browser the viewer uses, whether they're watching on a phone and who made it, and many other factors. The producer only gets so much control, but the AV610 gives you a way to produce a very crisp, controlled display of the accessibility text.

AV610 CaptionPort Features and Advancements

Finally, the 610 lets you show languages that you generally couldn't show with a closed caption decoder, because very few decoders can work with any standard that supports non-Latin characters.

Because the 610 renders its own characters, it gives you access to font libraries in really every major global language. It can still be a challenge to find transcription services for some of these languages around the world, but especially with advances in AI translation, it is possible to get that data into the 610, and now you actually have a way to display it to your viewers without it getting lost in translation somewhere in the technology chain, since most vendors of players and set-top boxes just don't support languages that aren't primary languages in their region.

The 610s that have been shipping for the past year max out at 1080p resolution, with a 3 Gbps SDI signal. By the end of the summer, we'll begin shipping an option with 12 Gbps SDI input and output, which gives you a native 4K UHD render for the output. That can really make a difference when you want to display captions on a very large screen on a large stage. You won't see the benefit of 4K on a consumer monitor if someone is seated a ways away from it, but for a large screen it's really key. So for this application, even more than for broadcasting, we've definitely seen a lot of interest in a 4K product, and please get in touch if you're interested in receiving one of those soon.
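The jump from 3G to 12G SDI tracks the jump in pixel count: UHD (3840 × 2160) has exactly four times the pixels of 1080p, and the nominal 12G-SDI rate is four times the nominal 3G-SDI rate.

```latex
\frac{3840 \times 2160}{1920 \times 1080} = \frac{8\,294\,400}{2\,073\,600} = 4,
\qquad
\frac{11.88\ \text{Gb/s}}{2.97\ \text{Gb/s}} = 4
```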

Recap

So that's what we have for today in terms of our featured products. I believe we'll have some time for Q&A, so we can also cover anything from the last webinar, which focused exclusively on closed captioning in a more traditional broadcasting and cable sense and the different technologies for that.

But I think it's really good to have been able to devote some time to talking specifically about our A/V products here and how that's also an important part of the vision with IP video, accessibility, and AI that we've been working on at EEG.

Traditional broadcasting has been very well covered for closed captioning for a long time. There are always improvements that can be made, in coverage of individual segments and in making the service more economical and higher-quality, but it's been legally mandated for a long time. In A/V, events, and video conferencing, on the other hand, I think these are growth fields generally, but especially growth fields for accessibility and for global reach through things like translation. So I think our customers, as event producers, are really only at the tip of the iceberg right now in terms of how many of these events could be captioned as more economical, high-quality, practical solutions become available. And that's something that's very exciting to be a part of.

Q&A

So, Regina is going to help manage our Q&A and I’ll turn it over to her. Thanks everybody for listening.

Regina: Yeah, so we will now be answering questions, so if you haven't already done so, please feel free to enter your question into the Q&A tool at the bottom of your Zoom window. So the first question is asking how the captions are being populated in this webinar, and if you can explain the signal flow.

Bill: Yes, it's using Lexi and Falcon. It uses the HTTP variant of Falcon, so the menus are slightly different from the ones in the screenshots earlier, but not by much, because essentially you provide your output URL for the Zoom meeting. Each Zoom meeting has its own unique URL, and the basic Zoom documentation will show you how to get to it. As long as you have the correct permissions as a host or panelist on the event, you can go to the CC button and pick up that URL, and Falcon provides the captions there.

You have an Access Code for the Falcon that is linked to that URL, and you give that Access Code to Lexi. Lexi then just needs a source of audio for the captions, and for that we have a Windows tool that we use in a couple of these meeting-type contexts, called iCap Webcast Audio. It's a free download to use with iCap products, including Falcon. Basically, we take the audio from the Zoom meeting and uplink it through this Windows tool to the Falcon. An iCap captioner (in this case Lexi, but it could also be a human captioner) opens the iCap program, hears the audio, and sends a transcript; the transcript is forwarded to the Zoom URL, and from there viewers see it when they click the closed caption button.
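To illustrate the last hop of this flow, where transcript text is forwarded to the meeting's caption URL, here is a minimal sketch of how a generic client might build one such request. It assumes the convention Zoom documents for third-party caption services (a plain-text POST to the copied caption URL with an incrementing `seq` query parameter and an optional `lang`); check Zoom's current documentation before relying on it. This is not EEG's Falcon implementation, `build_caption_request` is a hypothetical helper, and the request is only constructed, never sent.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit
from urllib.request import Request


def build_caption_request(token_url: str, seq: int, text: str,
                          lang: str = "en-US") -> Request:
    """Build (but do not send) one caption POST to a meeting's caption URL.

    Assumption: the service expects plain-text bodies and a `seq` counter
    that increases with every caption line, plus an optional `lang`.
    """
    parts = urlsplit(token_url)
    # Keep whatever query parameters the copied URL already carries.
    query = parse_qsl(parts.query, keep_blank_values=True)
    query += [("seq", str(seq)), ("lang", lang)]
    url = urlunsplit((parts.scheme, parts.netloc, parts.path,
                      urlencode(query), parts.fragment))
    return Request(url, data=text.encode("utf-8"),
                   headers={"Content-Type": "text/plain"}, method="POST")
```

A caller would keep its own counter and pass `seq=1`, `seq=2`, and so on for successive caption lines, then hand each request to `urllib.request.urlopen`.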

Regina: Can SRT files be taken from Falcon for post-production editing?

Bill: Yes, definitely. Whenever you use Lexi, you'll have a file that you can archive from the Lexi job. With Falcon, I believe you need to request an account on the iCap Archive system to get that data.

But yes, in theory, your data there, unless you ask for it not to be archived as part of your Falcon service, will be available from the iCap Archive tool. You log in and specify the begin time and end time of the caption data you're looking for. The export from that is natively an SCC file, but EEG Cloud, the same website that hosts Lexi and Falcon, also has a free converter, and SRT, TTML, and VTT are among the output formats it supports.
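Decoding SCC is the involved part, and it is what the EEG Cloud converter handles. As a sketch of the output side only, assuming captions have already been decoded into hypothetical `(start_seconds, end_seconds, text)` tuples, this shows how SRT cues are laid out: a sequence number, an `HH:MM:SS,mmm --> HH:MM:SS,mmm` timing line, the text, and a blank line. `to_srt` and `srt_timestamp` are illustrative helpers, not part of any EEG tool.

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm (comma before ms)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(cues) -> str:
    """Render (start_seconds, end_seconds, text) tuples as SRT cues."""
    blocks = []
    for index, (start, end, text) in enumerate(cues, start=1):
        blocks.append(
            f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n"
        )
    # A blank line separates consecutive cues.
    return "\n".join(blocks)
```

VTT uses nearly the same layout, but with a `WEBVTT` header line and a period instead of a comma before the milliseconds.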

Regina: And what is the maximum I/O for Lexi Local in terms of a scalable network?

Bill: Well, we've tested that and have been promoting it as a solution for up to 10 simultaneous encoder channels. If the question is really about bandwidth, I'd have to do some math on that. Keep in mind we're not transmitting anything like full-resolution video over these networks. What we're sending is compressed, encrypted iCap audio data between the Lexi Local server and the individual caption encoders, plus captioning text and formatting data coming back, so the individual streams are not very high bandwidth. For total network I/O across, say, 10 channels, the ballpark might be 5-10 Mbps of actual network traffic.
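Working backward from that ballpark, 5-10 Mbps across 10 channels implies roughly 0.5-1 Mbps per channel. The sketch below scales that range to a smaller channel count for network planning. The per-channel figure is derived arithmetic, not a published spec, and linear scaling is an assumption; `estimate_total_mbps` is a hypothetical helper. Ten channels is the tested limit mentioned here, so larger counts are outside what was discussed.

```python
# Ballpark from the webinar: 5-10 Mbps total for 10 Lexi Local encoder channels.
TOTAL_MBPS_FOR_10 = (5.0, 10.0)
# Implied per-channel range (derived arithmetic, not a measured figure).
PER_CHANNEL_MBPS = (TOTAL_MBPS_FOR_10[0] / 10, TOTAL_MBPS_FOR_10[1] / 10)


def estimate_total_mbps(channels: int,
                        per_channel: tuple = PER_CHANNEL_MBPS) -> tuple:
    """Scale the per-channel range linearly (an assumption) to `channels`."""
    if channels < 0:
        raise ValueError("channels must be non-negative")
    low, high = per_channel
    return (channels * low, channels * high)
```

For example, `estimate_total_mbps(4)` gives a planning range of 2-4 Mbps.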

Regina: So I thought this one was interesting. From your experience, which ADA compliance caption features are the most necessary for those who depend on captions to communicate? They're also asking whether some are mandatory versus just nice to have.

Bill: Well, maybe the question is best answered by referring to the FCC requirements, because I'm a technology guy and not giving a lawyer's opinion on this. My basic understanding as it applies to caption products is that, while the FCC requirements for captioning on covered types of communications (broadcasting and cable, and a relatively small subset of online video) are quite specific about what captions should be like, ADA is quite a bit broader. ADA says that videos need to be accessible, and it covers many more types of video and many more situations than the FCC has jurisdiction over.

But comparatively, the guidelines for how ADA applies to something like, let's say, a municipal meeting or a business conference are not always so clear. You're required to provide equal access, but precisely what are you required to do? Where is the line? Some guidance exists on those issues, but in terms of real, official legal guidelines, a common complaint among people who are attempting in good faith to comply is that what they've been asked to do is somewhat vague.

So what the FCC will ask you to do for live video is, essentially, provide captioning that's as accurate as possible; provide captioning that has as little delay as possible; provide captioning that doesn't obscure any other elements on the video screen that are critical for understanding the video (a closed caption-focused point, but also valid for open captioning); and provide captioning that's complete, meaning it covers the program from beginning to end rather than only part of it.

There are also things a web player is supposed to do for FCC compliance, like giving viewers control over the color and style of the captions. Unfortunately, most commonly used web players don't actually support that.

So that's a set of guidelines that can help. As far as ADA is concerned, the best thing to do can really vary a lot from event to event. Some events are lucky enough to have detailed outreach in that area; other times people are, of course, just trying to do the best they can with what they have.

So there's a legal aspect and a technical aspect to that question. I'll try not to belabor it too much, but maybe that's a little bit of helpful guidance.

Regina: We had a question asking about the delay between the original audio and audio received by captioners through Falcon and iCap.

Bill: Yeah, the whole design of the iCap system is built around keeping those delays as small as possible. Any captioner, human or automatic, is going to need to hear a couple of syllables, more likely a couple of words, to really produce a transcription where you know what's being said.

So typically, your minimum delay for that process is going to be something like three seconds, you know, most optimistically. And, you know, so our goal is really to make sure that the technology process is adding to that as little as possible.

And typically with iCap, the delay getting the audio from point to point is very little on top of your basic ping-time-style traffic delay over the internet. If you figure it could take something like 100 milliseconds to communicate with a server on iCap, and we keep the audio frame sizes very small so that framing adds maybe another 50 milliseconds on top of that, then getting audio to the writer within about 200 milliseconds is completely achievable in most cases, and that's what iCap is going to do.

So your end-to-end audio-to-text delay might be more in the 3-5 second range, but the technology's contribution to that is really pretty minimal; it's almost entirely the actual transcription time.
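The delay budget described above can be written out as simple arithmetic. This sketch uses the rough figures quoted in the answer (about 100 ms of network delay, about 50 ms from framing, and at least about 3 seconds of transcription time) as assumed defaults; they are illustrative estimates, not measurements, and the function names are hypothetical.

```python
# Rough figures quoted in the webinar, used as illustrative defaults.
NETWORK_MS = 100              # ping-style delay to reach an iCap server
FRAMING_MS = 50               # extra delay from iCap's small audio frames
MIN_TRANSCRIPTION_MS = 3000   # a captioner needs a few words before writing


def audio_delivery_ms(network_ms: int = NETWORK_MS,
                      framing_ms: int = FRAMING_MS) -> int:
    """Time for audio to reach the captioner (human or Lexi)."""
    return network_ms + framing_ms


def end_to_end_ms(transcription_ms: int = MIN_TRANSCRIPTION_MS, **kwargs) -> int:
    """Audio-to-text delay: audio delivery plus the transcription itself."""
    return audio_delivery_ms(**kwargs) + transcription_ms


def technology_share(transcription_ms: int = MIN_TRANSCRIPTION_MS) -> float:
    """Fraction of the end-to-end delay contributed by audio delivery."""
    return audio_delivery_ms() / end_to_end_ms(transcription_ms)
```

With these defaults, audio delivery is 150 ms (inside the ~200 ms target) and the end-to-end delay is 3150 ms, so the technology accounts for under 5% of the total, which matches the point that the delay is almost entirely transcription time.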

Regina: I got a couple questions about the AV610. First, can the AV610 display multiple languages at the same time?

Bill: No, it doesn't currently do that. If you were going to put them on two different screens, you could certainly use two 610 units, but we don't currently support two languages on one unit. It's a cool idea, though.

Regina: And with the AV610, can you have the captioning overlaid on still or live video, as opposed to above or below the presentation area?

Bill: Yes. For example, an HD492 closed caption encoder also has a built-in SDI decoder that provides a more television-style closed caption overlay, and the AV610 has that feature too. We focused a bit more on the things the 610 does that normal closed caption decoders can't do, but yes, it also has the basic over-the-screen closed caption overlay.

Regina: Someone's asking if Falcon can do both 608 embedded and open captions.

Bill: Falcon does not do open captions. Falcon can do 608 embedded captions, it can place captions into the RTMP stream in the form of the onTextData or onCaptionInfo cue points that some systems expect, and it can do the HTTP uplink we discussed with Zoom. Our next roadmap release is also going to include the VTT text track feature. But Falcon does not currently burn in the captions as an open caption feature the way the 610 does. You can, of course, stream the output of the 610 if you'd like that.

Regina: Alright, and it looks like we have time for one more question. So someone is asking how fast we can retrieve the SRT file once a live caption finishes.

Bill: Retrieval is virtually instantaneous. To account for all forms of delay, it might be prudent to wait, say, one minute, but effectively it's as-run data; it's not additionally processed, and the as-run data is available pretty much immediately. Of course, a common application is to go through that data and make some corrections, and that will take a bit of time, but the data itself should be available essentially up to the minute.

Regina: Alright. Well, that completes the Q&A session of this webinar. So, I'd like to thank everybody for joining us today. If you have any questions, you can reach out to me directly at reginav@eegent.com.

Bill: And thank you to anyone who was here last week. We got a few really nice letters of feedback and some thoughtful questions, so thank you for that. Keep ’em rolling.

Regina: Yeah, thank you so much! Hope you all take care and hope you join us for the next webinar in three weeks.

Bill: Let us know what you’d like to see.

Regina: Thank you.