EEG Video continued its popular series of Zoom webinars in May, educating media professionals about our full range of closed captioning innovations.

Best Practices for Closed Captioning and Broadcasting • May 21, 2020

During this well-attended online event, John Voorheis, Director of Sales for EEG, took the audience through a guided tour of EEG solutions built for broadcasting, entertainment media, and more. This essential webinar covers:

- All about EEG’s closed captioning solutions for broadcasters
- How you can use our products for your captioning needs and workflows
- The latest closed captioning advancements at EEG
- A live Q&A session

Featured EEG solutions included:

This was our second webinar to cover Best Practices for Closed Captioning and Broadcasting. Visit here to experience the first installment on this topic, which originally streamed on April 24, 2020.

To find out about upcoming EEG webinars, as well as all other previously streamed installments, visit here!

Transcript

Regina: Hi everyone, and thank you so much for joining us today for today's webinar, Best Practices for Closed Captioning and Broadcasting. My name is Regina Vilenskaya and I'm the marketing content specialist here at EEG. Today I will be joined by John Voorheis, EEG's director of sales. John has been with EEG for four years now and has extensive experience in technical sales. For the Q&A portion of the webinar, we will also be joined by Bill McLaughlin, the VP of product development.

So for today's webinar, John will be going over who we are and what EEG does, the EEG solutions built for broadcasting, entertainment media, and more, and he'll be going over the latest products and features. And during the webinar if you have any questions about any of our solutions, you can enter those into the Q&A tool at the bottom of the Zoom window. At the end of the webinar we will answer as many questions as we can.

So that covers everything that I wanted to start us off with, so I'm now going to welcome John to kick us off with the webinar Best Practices for Closed Captioning and Broadcasting. Welcome, John!

John: Hey everybody, how are you? Thanks for - thanks for joining us today. Hope everybody's staying well amidst, you know, everything - everything that's going on. So yeah, really great to be here. Really, really excited to talk a little bit with you all today about EEG's closed captioning solutions for broadcasting.

And a little bit of background on EEG, we were founded in the early 1980s, I think around 1982 or '83. Bill can probably give you an exact date at the end, but we were founded as Electrical Engineering Group, you know, as EEG and that's where the EEG comes from and, you know, and recently we've kind of started to use EEG Video a little bit more. But we've been involved - really the key point here is we've been involved in providing accessibility solutions really since - since our onset as a company back in the early 1980s.

When I - when I first started working here in 2016 I was, you know, I was really excited to learn that EEG had actually worked with PBS, with the Public Broadcasting System, to develop the 608 data standard for North American closed captioning, so you really - you know, wouldn't - you know, you wouldn't have closed captioning if it weren't for EEG would be one way of looking at that. But this has been in our DNA for a long time, you know, in terms of video accessibility, closed captioning, data insertion, I mean, it's - it's really what we do.

So, you know, really great that you all are here today and just on this slide, this shows a couple of our core competencies, some of which we'll get into today. You know, iCap Alta - iCap is EEG's proprietary protocol, you know, for transporting audio to captioners and then receiving caption data in our encoding solutions. Right now we caption about 5 million minutes a month live through that system so, you know, definitely some really, really extensive usage there, which is great. Alta is our encoding solution for IP video.

And you have Falcon, which we really aren't going to get into today, but that's a virtual RTMP encoder for streaming. Lexi's our automated captioning system, which we will talk about, which we also have a local on-prem version of. Lexi's cloud-based as you can see from that cloud graphic, and then our AV610, which is a CaptionPort. It's not actually technically an encoder. If you want to learn more about that, you know, next Tuesday at 2 PM would be a great time to tune in to our next webinar when we will be talking about that so, you know, gotta have a little bit of a cliffhanger here for closed captioning.

HD492 iCap Encoder

So moving on to the next slide here. The HD492 iCap encoder. So this is really EEG's flagship product. It's a dual in/dual out SDI encoder with a decoder output. This series - this series of encoders has been around for, you know, probably over 10 years. There's the HD480, 490, then the 491, and then the 492 is the current model. So yeah, that's a - that's our flagship SDI encoder. It is an iCap-enabled unit.

So how iCap works is the encoder actually–the HD492 or any iCap encoder, actually–extracts the audio from the video, sends it through an iCap server that EEG maintains to a live captioner or our Lexi - Lexi automated captioning service, and then the caption data is returned along that same pathway.

There's a lot of benefits to that architecture. It was really designed with security in mind first and foremost, because when you're connecting, you're maintaining a static connection to EEG's cloud-based iCap server, so that's just a static connection from your network and your encoder to the cloud server EEG maintains. And from there it's connected to the caption agency, so if you need to change your captioner for contractual reasons, availability reasons, like working with multiple caption agencies - you know, there's a whole slew of reasons why, you know, you might need to do something like that, you're maintaining a static connection to that server. So it's - it's really kind of just a set-it-and-forget-it scenario which, you know, there's so much changing every day that it's nice to have those sorts of options when they're available.

The 492 features a modem as well, as a failover, which we actually still have. You know, you'd be surprised there's quite a few people who do still use modems to connect to captioners, and that's okay, we understand. We'd definitely like to get you all on iCap. You know, I can schedule some time to talk to any of you about the benefits of that, but it does offer that modem option which many find to be useful as a failover if not, you know, a primary source of connectivity.

Also have a serial RS-232 cable connection that can be used to source caption data from a teleprompter or from an onsite captioner, and you can also use, you know, unencrypted plaintext TCP/IP telnet so, you know, that might be appropriate if you have a captioner somewhere on your internal network or something like that who wants to connect to the encoder. So those are all great options that the 492 has.

It's also able to connect to Lexi, which is our automatic captioning service. Actually the 491 and the 492 are both able to connect to Lexi, and there's a number of settings - there's a number of additional modules for the 492 that can be added on, you know, that, you know, might allow you to record captions either through the encoder or through iCap so you can, you know, download the caption file, you know, really almost immediately after a broadcast so you have that for repurposing the content.

And we actually also, just for those of you who might have a need for this, we have a free caption file conversion tool at eegcloud.tv, so if you don't already have an EEG Cloud account and you are using iCap, you know, that's a - that's a great way to get started right there with our cloud services, such as Lexi. So if you do need to convert any caption files, that's something we have available at no cost at eegcloud.tv, so be sure to sign up for an account there if you have any sort of file conversion needs.

iCap Workflow and Integration Advantages

So iCap workflow integrations and advantages. You know, I feel like I've kind of been talking about that all along, but we really have a robust network of iCap captioners. There is a list available; you can find it by keying "EEG iCap captioners" into Google. I do that all the time to send folks such as yourselves links to what captioners are part of the iCap network. And that isn't an all-inclusive list, but it's a very good list, you know, and one of the great advantages of iCap is it's a service we provide at no cost or it's a software download, rather, we provide at no cost to the captioners so, you know, that's - that's one thing. If you have a captioner you're working with, you're really happy with and you want to, you know, introduce them to iCap it's no cost. It's honestly, really - they would just need to contact EEG to get that download and it's really an email or a phone call they're happy to make because if there's one thing I've learned, you know, if there's anybody who loves iCap, you know, as much as broadcasters, it's the captioners. It's a really straightforward process in there and they can't get locked out.

You know, there's a monitor for broadcasters to see who's logged in at all times. There's logging as to who is transmitting data to the encoder. You can also have access, of course, to Lexi as a failover or, you know, as, you know, a last minute resort, or even as a standalone solution. We can talk about all those options.

We also offer through iCap the ability to translate into multiple languages and, of course, as I was saying, the ability to create caption files, and you can do that either through a CC Record module we have available on the HD492 which just records it in the encoder, all caption data going through it, and stores that I believe for six months, or using our cloud services, eegcloud.tv. We have a feature known as CCArchive. You can just select the dropdown menu and pull a caption file from a time and date window, so it's super useful if you're looking to repurpose live content that's been sent on-air, you know, for rebroadcast, for streaming, for VOD purposes, you can just pull that caption file immediately, so that's - that's really useful.

HD492 iCap Encoder Features and Advancements

So, you know, here are some of those features I've just mentioned, you know. So another exciting feature of the 492 as well is, of course, you can use SCTE-104 VANC insertion, so you can insert ad triggers, ad segmentation via this encoder without relying on a separate unit, which we do also offer so, you know, definitely ask about that if you have any SCTE insertion needs. But there is a module available for the 492, so you can really kind of keep your rack nice and clean and not take up too much space with this stuff, so that's - that's really useful as well.

And we also as of - as of NAB last year–and this was really exciting I thought, and I think a lot - a lot of people did here at EEG and hopefully you all did as well–we released the 2110 module for the HD492. So what that does is, essentially, it allows you to insert ANC data into a parallel IP video workflow, so to an extent it's really, you know, almost future-proof, the HD492 encoder. Sorry about that. It's future-proof, the HD492 encoder, and if you're working, you know, primarily in SDI video but you're starting to play around with IP video and you might be sending some of that out to air, or you want to see how captions would work with, you know, your other gear, it's a great solution because you can have both. So that's something we're really excited about. Definitely have, you know, some of those out in the field and it's pretty exciting.

This fall, kind of the big news is we are releasing 12G SDI 4K for the HD492, and there are some options for doing that in the present form, you know, and all the frame sync and some things like that - I was actually just discussing that earlier today, and if you have questions about how you can use a 492 in its existing form to caption in 4K, you know, we'd be happy to answer those, or Bill can probably address that. But we're definitely releasing a full 4K version this autumn, so that's going to be very exciting.

Alta Software Caption Encoder

So, you know, I think that's really a good segue in terms of talking about, you know, 2110 and how that encoder's been future-proofed for IP video to talk about, you know, that transition from SDI to IP video and, you know, how we've done that is, of course, with our Alta software closed caption encoder.

And one thing I think I've seen a lot of in 2020–we all have–is we've been seeing a lot more inquiries and a lot more deployments of Alta here at EEG so it seems like, you know, at long last IP video is really starting to gain some more widespread adoption, which is definitely exciting, you know, for all of us here and as an industry in general as you kind of move on to this next standard. So, you know, it's definitely exciting to kind of see, you know, amidst all the sort of chaos we've seen in 2020, it is nice to see that we're moving on to a next-gen video standard. It is, you know, always nice to see progress in all its forms, so that's - that's really exciting.

But with Alta, you know, I know Bill and some of our other folks here at EEG, they've been very active in JT-NM events in terms of the interops, as well as participating in SMPTE standards meetings and so on, so this is - this is technology that, you know, we're very heavily invested in and we're really interested in, you know, continuing iCap into IP video. I anticipate Alta will be every bit the gold standard for closed caption encoding as the HD492. And, you know, I'd like to think it already is for a lot of folks who are doing IP video. But, you know, more on the product itself, it is - it does work with both MPEG transport streams and 2110.

Alta MPEG-TS Workflow

So here you have MPEG - the MPEG transport stream workflow where it's an input into your Alta and, just like in a hardware encoder, the compressed audio - the encrypted audio passes through iCap to a transcription facility or to Lexi, and then the real-time captions go back where they're embedded as 708 captions with DVB teletext or DVB subtitles, and they go out for live broadcast. So Alta itself, it is a software product.

We do offer it, you know, for sale on servers that we configure, so that, you know, comes as something of a plug-and-play solution. It can also be installed on a VM or on a cloud service provider so, you know, again any questions around that would be great to direct to Bill towards the end. But we definitely have a full range of options for how you can deploy Alta into your IP video workflow.

Alta MPEG-TS Features and Advancements

Now some of the big advancements we've made here is, you know, we've expanded the formats which we support with Alta. We're always looking at supporting more formats. I was on a Slack discussion just yesterday or today–the days are running together a little bit for me these days, as I'm sure they are for all of you–but just recently this week we were looking at, you know, supporting another video standard in Alta. So like I said, this is stuff that we're really engaged in, in terms of looking at and improving these products and, you know, Bill and his team, it's really a great group we have here and, you know, we're constantly looking at improving these products and, you know, what's next, which is great, I think, for, you know, not only us as an organization but also for you, our customers and prospective customers.

But, you know, we've seen some growth in, you know, the type of formats we support. We now support HEVC embedded video, which is perceptibly lossless video as I understand it. DVB subtitles, so support for the European - European closed caption subtitle standards, as well as SMPTE 2038/ASPEN. And with Alta - included in Alta is the ability to, of course, do SCTE-35 insertion so, again, included not even as an additional module, but with that license you have the ability to do SCTE-35 insertion for ad triggers, ad segment - ad segmentation, etc.

Alta 2110 Workflow

And, you know, here's this chart again, and this is for a 2110 workflow where, of course, your audio, your data, and your video streams are all coming in separately and they're time-synced through a PTP controller. In the center you see the web interface where you can manage all your Alta channels and, again, this is sent, you know, via the iCap server, either to Lexi or to a transcription facility. The captions come back, they're embedded, and you have an output with ancillary caption data embedded.

You're probably thinking you've seen the chart two or three or four times and it looks, you know, kind of the same. And that's really the idea is - because that's what I've heard from a lot of customers is that, you know, EEG, it just - it just works. It's just easy. You set it and forget it, and that's really the idea. There's certainly enough to worry about, especially these days, but there's certainly plenty to worry about and you don't want closed captions to be one of them.

You know, I was at, you know, a major - major private university here on the East Coast. It's actually in North Carolina, and somebody had said to me, "You know, with EEG, you know it just works. That's all I have to say about it, is it just works." And that's kind of the idea with iCap, is that, you know, you set it up, you set up your firewall, and it just works. Any changes to the captioners occur at that sort of level. So really, it just works, and that's the idea. We don't - we worry about closed captioning so you don't have to.

Alta 2110 Features and Advancements

We've also, you know, recently with an Alta Network Gateway, we have - we have a server that we can ship configured with up to 10 channels of SMPTE 2110 audio and 2110-40 ancillary data simultaneously so, you know, a very dense solution. It's also integrated with the NMOS Management API, so you can manage your Alta server, as well as your 2110 products from all different vendors so, you know, that's - that's really huge in terms of simplicity for users and, again, as I've been saying, simplicity is really what we all want in closed captions.

This is just a setup screen of the Alta Network Gateway and then here is the NMOS Management API in which you can manage all of your Alta channels. So, you know, very convenient for our users and that - in that regard, and flexible, so lots of options there.

Lexi Automatic Captioning

And in terms of flexibility, I don't really think any iCap - any discussion of flexibility with closed captioning would be complete without talking about automated captioning and, you know, I want to preface this by saying, you know, I think - I think live closed captioners, human closed captioners, CART transcriptionists, they continue to be the gold standard for closed captioning. But, you know, at the same time, they're not feasible for every situation. You may simply not have someone available, you may not be able to, you know, have someone in the facility. Security clearance reasons, as you know, might be the case with Lexi Local, you know, it may not be cost - you may not be under mandate to caption certain things, but your viewers, you know, strongly prefer closed captions so, you know, to kind of meet - you know, meet that requirement of your viewers, that might not be, like, an FCC requirement. You might be looking for a captioning solution as well but, you know, you might not, you know, want to necessarily budget that amount - that amount of operational expenditure.

So, you know, there's a lot of scenarios where Lexi can come into play, so I'm gonna talk a little bit about that. Lexi's our automatic captioning service. It's definitely a really mature, robust solution we released at my first NAB here at EEG back in 2017. And, you know, I work in our Brooklyn office, you know, with about a dozen developers who live and breathe this stuff day in, day out trying to improve Lexi and improving Lexi–not really trying. It's come - it's come so far in three years, really, but right now we support English, Spanish, and French. You know, the three - three languages which are under some sort of mandate here in North America. And we state about a 90% accuracy level out of the box. And we really have - you know, we have quite a few customers using this across all verticals–you know, a lot of broadcasters, a lot of state/local governments, some elearning, you know, some corporate applications, a lot of really interesting use cases for ASR technology, which is exciting to see.

Lexi Workflow

And again, here's this workflow, and doesn't this look familiar? Because it's really, you know, all a very similar workflow. The video source goes into the EEG encoder. The audio passes through iCap to Lexi, comes back to the encoder, and then you have a caption output. It's really simple. We try to make it that way. You know, I think some of our support people might differ from my assessment but, you know, nonetheless, we do try to make this a simple process and I think we've - we've largely been extremely successful in making captioning as simple as possible for you all.

Lexi Local

So we also have a Lexi Local solution and this is typically - this was really designed in response to requests for an on-prem solution for corporate applications where you might be discussing financials, product releases, or even, like, sensitive health data or something like that. You know, just non-public data that there may be severe restrictions around in terms of it even passing over the cloud, no matter how secure the protocol. So that was what this was designed for and Lexi Local is basically Lexi in a 1RU unit, similar in form - almost identical in form to the HD492, but it doesn't require internet connectivity to operate.

We release updates that, you know, you would connect to the internet to download and then apply, you know, to, you know, update the speech recognition, but it doesn't require connectivity to caption. You can actually - you can connect multiple encoders to a single unit and you can have multiple instances of Lexi Local on that single 1RU unit, but per instance of Lexi Local, we license it, you know, on an unlimited use basis. So per instance of Lexi Local, you could really caption 24/7 so, you know, in certain high usage scenarios, you know, if you're captioning, you know, probably in excess of 14 hours a day, off the top of my head, or somewhere where, you know, you're looking at using an automated solution really extensively, from a cost perspective, Lexi Local could make sense for you because of that unmetered usage. So, you know, definitely shoot an email to sales@eegent.com if you have any questions about Lexi - Lexi pricing.

And this is fully compatible with, you know, of course all EEG - all current EEG encoders. And as opposed to the cloud model which, you know, in terms of usage, it's - it's billed on a per-hour basis, whereas Lexi Local's unlimited. So that's why I say Lexi Local could offer some cost benefits for those of you who might be doing some extensive live closed captioning.
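The cost comparison John describes (a flat, unmetered license against per-hour cloud billing) comes down to a break-even calculation. The sketch below is illustrative only: the webinar gives no prices, so the dollar figures are invented placeholders and the function names are hypothetical, not EEG pricing or tooling.

```python
def breakeven_hours_per_day(flat_cost_per_year: float,
                            hourly_rate: float,
                            days_per_year: int = 365) -> float:
    """Daily captioning hours at which a flat license costs the same as metered billing."""
    if hourly_rate <= 0 or days_per_year <= 0:
        raise ValueError("hourly_rate and days_per_year must be positive")
    return flat_cost_per_year / (hourly_rate * days_per_year)


def cheaper_option(hours_per_day: float,
                   flat_cost_per_year: float,
                   hourly_rate: float,
                   days_per_year: int = 365) -> str:
    """Return which billing model costs less for a given daily usage."""
    metered_cost = hours_per_day * days_per_year * hourly_rate
    return "unmetered" if flat_cost_per_year < metered_cost else "metered"


# Placeholder numbers only (NOT real prices): a 36,500/year flat license
# against a 10/hour metered rate.
print(breakeven_hours_per_day(36500.0, 10.0))
print(cheaper_option(14, 36500.0, 10.0))
print(cheaper_option(4, 36500.0, 10.0))
```

With real quotes substituted for the placeholders, the first function gives the daily usage above which the unmetered option starts to pay off.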

Lexi Automatic Captioning Features

One of the - one of the really exciting features about Lexi is our Topic Models. You know, we'll talk about those, we'll talk about Lexi Vision, then our iCap Translate before we open this up to questions. But our Topic Models are really how you program Lexi with vocabulary. So at one point you could actually enter a URL, I think you still can. We may have - we also allow you to upload lists of names, places, things like that, just lists of text. And you can even upload individual words, you know, that may have, you know, may be particularly difficult to pronounce for, you know, either humans or, you know, speech recognition. You know, Mississauga, Ontario is an example I always give, for any of our friends to the north in the audience.

But the Topic Models are really useful in kind of getting that baseline of accuracy from 90% up to above 95% even so, you know, it's a really powerful tool there and to a large extent, you know, you can - you can program different custom Topic Models for different content and only have to enter that once. But you can have a news Topic Model, a sports Topic Model, etc. And those are all, you know, the types of folks that we work with using Lexi, as I think I said before. You know, we have, you know, well over 100 users at this point, you know, all happily using Lexi, so it's really exciting to, you know, kind of see how this technology's been able to improve accessibility in so many different scenarios.

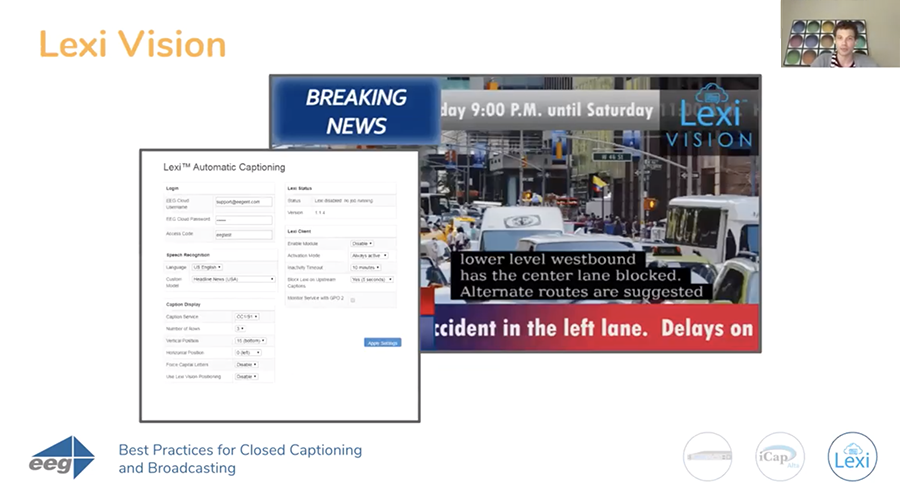

With Lexi Vision, it actually analyzes the on-screen graphics so the captions don't obstruct those. You know, typically, you know, caption placement has been left in the hands of the captioner but, you know, that's when the captioner is a human, so it's only natural that, you know, AI does the same thing, and I believe this can even be configured to not obstruct faces so, you know, if you have people who are also relying on lip-reading to supplement closed captioning, that can be very useful for them. You know, you may also have some advertisements on the bottom of your screen or something like that in the context of sports when those eventually return, you know, or just graphics you don't want to obstruct. So definitely, you know, some really useful applications there.

And then finally we have iCap Translate, which is really exciting because it allows you to caption in multiple languages by contextually analyzing sentences from iCap and that caption data, and then translating it into another language. You know, this definitely works with English, French, Spanish, German, several others. Definitely can send you some information on that on request. If you have any questions about what languages we support with iCap Translate, please type them into the text box, but yeah, it's - it's an excellent service and, you know, with the HD492 since you have those two video outputs, you can actually encode in two languages simultaneously, so you're using CC1 and CC2, so it's a great way to make use of that encoder, as well, using iCap Translate.

Lexi Automatic Captioning Advancements

So, you know, as I was saying, you know, again with the features and advancements for Lexi automatic captioning, we're constantly working to update ASR models to improve, you know, the abilities therein and, you know, get that accuracy as high as possible. Like I said, 95% is very achievable, you know, under the right circumstances, and circumstances in which, you know, speech recognition thrives: clean, single-speaker audio, minimal background noise, you know, people not arguing, you know, single-speaker audio, so it's - you can definitely get that accuracy percentage very, very high up there, even in excess of 95%, but that's just a matter of custom Topic Models and, you know, we put work into them so you can put work into them and, you know, with that you can really improve accuracy for that.
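The webinar does not say how the 90% and 95% accuracy figures are measured. One common convention, assumed here purely for illustration, is word accuracy: one minus the word error rate, where errors are the word-level edit distance between a reference transcript and the ASR output. The function names below are hypothetical.

```python
def word_errors(reference: str, hypothesis: str) -> int:
    """Word-level Levenshtein distance (substitutions + insertions + deletions)."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    prev = list(range(len(hyp) + 1))  # distances for an empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1]


def word_accuracy(reference: str, hypothesis: str) -> float:
    """1 - WER, floored at 0. Assumes a non-empty reference."""
    n = len(reference.split())
    if n == 0:
        raise ValueError("reference must contain at least one word")
    return max(0.0, 1.0 - word_errors(reference, hypothesis) / n)


# A place name the recognizer splits into two wrong words counts as two errors,
# which is the kind of miss a custom vocabulary list is meant to prevent.
print(word_errors("the mayor of mississauga spoke today",
                  "the mayor of miss saga spoke today"))
```

Under this measure, fixing a handful of recurring proper nouns can move a transcript several points, which is consistent with the jump John attributes to Topic Models.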

Recap

So just to recap, you know, it's really an exciting time right now because we're at the intersection of AI captioning, IP video, and SDI. With 492, you know, that could work with IP video, as well as with SDI. We've introduced - you know, Alta is a very mature solution at this point, you know. It's deployed rather extensively and, you know, it seems like with each passing quarter we're seeing more and more deployments of that, which is exciting.

And finally, you know, Lexi and iCap Translate, which leverage AI and speech recognition technology to deliver captions and accessibility in multiple languages. So definitely a really exciting time in broadcast, you know, and in closed captioning.

So guys, I really appreciate you taking the time on this - on this Thursday to join us today to talk about best practices for closed captioning and broadcasts. We will be giving another webinar next Tuesday at 2 PM so, you know, if you guys want to - want to come check that out and see some applications for A/V and so on, definitely would love to have you there.

Q&A

And at this point I'd like to open up the forum for any questions, you know. Thanks to Bill and Regina for joining me today. Thank you, Regina, for organizing this. I know you - I know you'd love to take any questions now so, you know, I'd like to open up the floor. And thanks so much everybody. It's been really a pleasure speaking to you all today and yeah, thank you so much.

Regina: Thank you so much, John. Yeah, so we've now reached the Q&A portion of the webinar. So if you have any questions and you haven't already done so, you can enter those into the Q&A tool at the bottom of your Zoom window.

So the first question that we have here is, can Alta encode into an HLS stream with multiple bitrates?

Bill: Yeah - well, John disappeared. I guess I'm answering this one, but, so Alta basically will work on a transport stream that is a single transport stream, so typically you would insert the captioning in H.264 in Alta and you would do that upstream of components that we're going to - to essentially do things like live segmentation of the stream for HLS delivery or for multiple bitrates.

Now, if you have transport streams that show the same content already in multiple bitrates and that's how they're being delivered for captioning, what you can do is you can set up multiple Alta channels. Each one of them is going to have an independent input stream and independent output stream, and you can tie these encoders together with iCap.

So you can have a multi-encoder iCap Access Code and that'll deliver the same captions into, you know, all the different bitrate streams. So to answer the question, you can do that. It requires multiple Alta channel licenses for each bitrate and, most commonly, the bitrate conversion is done downstream of the captioning. But if you have multiple streams that need captioning of different bitrates, you can do that with a single source of captioning and with multiple channels.
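The multi-encoder arrangement Bill describes is a fan-out: one caption source, several channels sharing an access code. The sketch below models only that idea. `AltaChannel`, `deliver_caption`, and the access code strings are hypothetical names for illustration and do not reflect the iCap protocol or any EEG API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AltaChannel:
    """Hypothetical stand-in for one licensed channel handling one bitrate stream."""
    name: str
    bitrate_kbps: int
    access_code: str
    received: List[str] = field(default_factory=list)


def deliver_caption(caption: str, access_code: str,
                    channels: List[AltaChannel]) -> int:
    """Send one caption to every channel registered under the access code.

    Returns the number of channels that received it.
    """
    matched = [ch for ch in channels if ch.access_code == access_code]
    for ch in matched:
        ch.received.append(caption)
    return len(matched)


channels = [
    AltaChannel("news-high", 6000, "NEWS-LIVE"),
    AltaChannel("news-low", 1500, "NEWS-LIVE"),
    AltaChannel("sports", 6000, "SPORTS-LIVE"),
]
count = deliver_caption("Good evening and welcome.", "NEWS-LIVE", channels)
print(count, channels[0].received, channels[2].received)
```

Each bitrate still needs its own channel, but the captioner (or Lexi) only writes the captions once.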

Regina: Someone is asking if the webinar will be available afterwards. So yes, this webinar is being recorded right now. Within the next few days, everybody who registered for the webinar will receive an email once the recording is available for viewing.

So Karl had actually commended John on the quote, "We worry about closed captioning so you don't have to," which I think is great to share how our solutions resonate with customers. But Karl is also asking to tell everyone more about Lexi Vision.

Bill: Yeah, so Lexi Vision uses the iCap video feature, which most of the newer encoders have an ability to do. And it uploads a relatively low resolution, low frame rate copy of the video signal from the encoder to iCap, and that's been being used in some installations for caption positioning, you know, by human captioners for probably five years that feature's been out.

With Lexi Vision, we're actually able to use image recognition AI that finds places on the screen where there's text graphics, where there's a lot of busy action on the screen, where kind of the center of the action with people's faces is, and these entities are recognized by the image recognition and a caption position is chosen to avoid these, and so it will go either to the bottom or the top of the screen, and can, you know, hedge in a little bit to the left or cut off a little earlier on the right to avoid your on-screen graphics, and to do that dynamically, because historically with - with most systems with automatic captioning or a teleprompter–or even with a lot of human captioners who don't have access to a video feed–you know, the traditional approach has been to say we're gonna choose all at the top or all at the bottom and we're just gonna do that all the time and we're gonna hope that that remains a good position for the captioning all of the time, you know, on all of the content but sometimes - sometimes the content isn't produced in such a way that one position is the best all of the time.

And so the dynamic positioning kind of helps you avoid needing to make that choice and, you know, it's - it's important because that's actually, I mean, right up there with accuracy and with latency of captioning - that's in the FCC requirements for live captioning, that the captioning be put in a position where it obstructs as little of the important on-screen action as possible so, you know, that's a - kind of is a first-order problem.

And with Lexi Vision we have, I think, kind of the best solution that's out there to actually manage that without asking anything new of the graphics production. You know, we're not saying, "Oh, you have to do graphics a certain way." You know, the captioning can actually adapt to what you want to do with the graphics.
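
[Editor's illustration: the top-or-bottom choice Bill describes can be sketched in a few lines of Python. This is only a toy model and not EEG's implementation. The `Box` type, the `choose_position` function, and the idea of scoring each candidate position by how many caption cells overlap a detected region are all assumptions made for this example. The grid size is the standard CEA-608 caption grid of 15 rows by 32 columns.]

```python
from dataclasses import dataclass
from typing import Sequence

ROWS, COLS = 15, 32  # CEA-608 caption grid: 15 rows by 32 columns


@dataclass(frozen=True)
class Box:
    """A rectangle on the caption grid (hypothetical type; all bounds inclusive)."""
    top: int
    bottom: int
    left: int
    right: int


def overlap(a: Box, b: Box) -> int:
    """Number of grid cells shared by two boxes (0 if they do not touch)."""
    rows = min(a.bottom, b.bottom) - max(a.top, b.top) + 1
    cols = min(a.right, b.right) - max(a.left, b.left) + 1
    return max(rows, 0) * max(cols, 0)


def choose_position(avoid: Sequence[Box], lines: int = 2) -> str:
    """Pick "bottom" or "top", whichever covers fewer cells of the regions to avoid.

    `avoid` stands in for whatever the image recognition reports (graphics,
    faces, busy action). Ties go to "bottom", the traditional default.
    """
    candidates = {
        "bottom": Box(ROWS - lines, ROWS - 1, 0, COLS - 1),
        "top": Box(0, lines - 1, 0, COLS - 1),
    }

    def cost(name: str) -> int:
        return sum(overlap(candidates[name], box) for box in avoid)

    # min() returns the first minimal key, so "bottom" wins a tie.
    return min(candidates, key=cost)


# A lower-third graphic occupying the last two rows pushes captions to the top.
lower_third = Box(top=13, bottom=14, left=0, right=31)
print(choose_position([lower_third]))
```

[Re-running the choice on each new analysis of the video is what would make the positioning dynamic rather than fixed for the whole program.]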

Regina: And we just have a follow-up question from Karl to - asking how to install and activate Lexi Vision. So if you can just speak a little bit to that.

Bill: Yeah, so there's two different steps, basically, on the - assuming we're talking about, say, a 492, an SDI encoder, you - on the iCap page on the config site, there is a setting for providing permission to upload the video in iCap, so you have to make sure that that is checked on each individual encoder that you're using. And then once that's checked on the encoder, you can also check Use Lexi Vision when you're starting a Lexi job, either on the startup page at eegcloud.tv on the website or in your encoder if you're automating the jobs from, say, a GPI on the encoder, then the job settings are also located in the Lexi tab on that encoder.

So essentially, for your preset for a certain GPI, you tell it to use the Lexi Vision feature. And if the encoder hasn't been - this feature is fairly new–several months–so if the encoder hasn't been on a recent build of software in that time, you may have to upgrade the software build on the encoder to see the new options. But with an updated encoder they should all just be there.

Regina: We have a couple questions from Gregory about Lexi Local, first asking if Lexi Local is a separate appliance and, if so, if they can add it to the 492's that they have installed to bring captioning inside the organization versus to iCap.

John: Yes and yes.

Regina: Alright, and -

John: Bill, you can elaborate on that if you like, but no, that's affirmative.

Bill: Yeah, absolutely. It's a standalone appliance that can support up to 10 different encoders attached to the same - to the same server. You know, it basically - it's a one rack unit server with a little LCD display to set up the basic IP settings and start communicating with it so it, in kind of form factor, it's very similar to another one of the encoders and, you know, the only real - you know, the only real, like, kind of asterisk or thing to understand with, you know, if you have existing encoders on iCap and being interested in using them with Lexi Local is that the iCap resource in the encoder needs to point, at a given time, either to the internal Lexi Local server or to iCap out on the cloud. So if you were ever going to be switching between these two operational modes, you do have to do a little bit of reconfiguration on the encoder to switch between the modes.

So an advantage of Lexi in the cloud is that, you know, you can interchange Lexi on the cloud with human captioners on the cloud, and you can kind of interchange that just on any kind of a daily schedule without making configuration changes from one source to the other in any kind of manual way. And with Lexi Local, if you ever did want to have the encoders also be on the cloud, that's a configuration change. So, you know, operationally a few minutes need to be allocated for that but, you know, yeah, if you were going with all Lexi Local then - then really, yeah, you can just take your existing encoder and redirect it there permanently and be set.

John: So follow-up question on that, Bill, for our audience. How many encoders could you have simultaneous–licensing permitting, I'll add that as a caveat–could you have captioning from one Lexi Local server? So if I wanted to - say I have multiple video feeds -

Bill: Per simultaneous encoder usage so if you were going to use - you know, if you had two encoders, using them in an alternating fashion on Lexi Local would - would be, you know, available for the base price. If you use both at the same time on Lexi Local, there's an additional channel worth of licensing on that.

John: But you could - you could use up to 10 simultaneously from one Lexi Local server.

Bill: From one piece of hardware.

John: From one piece of hardware if you - if you purchased the appropriate licensing. And I think - I just think that's good to know for those in the audience who might be, you know, more widespread deployments, you know, with a single Lexi Local server, you know, with the appropriate licensing caption up to 10 simultaneous -

Bill: Exactly and it's called local, but really, you know, by "local" you need to understand that it's - it's not a cloud service, it's within the customer's enterprise network. So for example, there's nothing that would be stopping you from connecting to different offices that are already connected through a VPN or a wide area network. There's nothing stopping you from using the same Lexi Local server in multiple physical locations but they just need to be connected internally by the customer-supplied networking. It's not a hosted cloud service the way, say, a Falcon or iCap or Lexi on the cloud is a hosted service.

John: Exactly. I just thought that might be useful for some people to know, so thank you.
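
[Editor's illustration: because licensing is described above as per simultaneous encoder, the number of channels a schedule needs is the peak number of jobs running at once. The Python sketch below computes that peak. The function name `channels_needed`, the representation of jobs as (start, end) times in minutes, and the error raised above the limit are assumptions made for this example. Only the limit of 10 encoders per server comes from the discussion.]

```python
from typing import Iterable, Tuple


def channels_needed(jobs: Iterable[Tuple[int, int]], max_channels: int = 10) -> int:
    """Return the peak number of overlapping jobs in a schedule.

    Each job is a (start, end) pair in minutes. A job that starts exactly
    when another ends is treated as alternating, not simultaneous.
    """
    events = []
    for start, end in jobs:
        events.append((start, 1))   # a job begins: one more encoder in use
        events.append((end, -1))    # a job ends: one fewer encoder in use
    # Sorting tuples puts -1 before +1 at equal times, so back-to-back
    # jobs never count as overlapping.
    events.sort()

    peak = current = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)

    if peak > max_channels:
        raise ValueError(f"schedule needs {peak} channels; one server supports {max_channels}")
    return peak


# Two encoders used one after the other need one channel; overlapping use needs two.
print(channels_needed([(0, 60), (60, 120)]))
print(channels_needed([(0, 60), (30, 90)]))
```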

Regina: So we have a comment from Alan saying that they're looking for - that they're looking at - looking to livestream in Spanish, so could you speak to live streaming with Lexi, even though we'll be talking a lot more about live streaming on Tuesday?

Bill: Well, I mean it's - Lexi does do Spanish, I mean, yet - we can either - that - again, Lexi would be any case where the spoken language of the audio track is the same as the language you want for captioning. So assuming that it's, you know, it's a Spanish audio track and you want Spanish, you just select Spanish when starting a Lexi job and you're good to go. The Spanish appears as the primary language captioning.

You know, iCap Translate that John mentioned is kind of the companion product to Lexi for a case where you want captioning in a language that is not the spoken language of the program, because - so, Lexi is effectively audio to captions whereas Translate is captions in one language to captions in another language. So it would be possible, for example, to - let's say you have a spoken program in Spanish. You could use Lexi to create Spanish captions on caption service 1 and then even additionally you could use Translate to also receive translated English captions on caption service 2. So, you know, the translated English captions at that point are derivative of the Spanish captions. You know, they'll be another second or two behind, but basically you could - that's how you would get multiple languages of text from a single spoken language would be to cascade the products and yeah. So you can - you can definitely get the Spanish to Spanish on that. You know, yeah, if - was there a question in there about streaming or -

Regina: Yeah, I mean, it - all it was just a comment about - "I'm looking at a live Spanish stream."

Bill: Yeah, so basically, it is - it is live and it is in Spanish. You know, as far - as far as, you know, stream goes, it's, you know, just basically for SDI. You would use something like the 492 product and, you know, if it was going to be - it was RTMP, I'd probably tend to usually recommend the Falcon product, and for something like an MPEG transport stream that would be the Alta product as far as actually inserting captions into the video.

Regina: Alright, someone's also asking about the workflow between iCap Local and Lexi Local, and asking if you would need both of them.

Bill: So iCap Local is - is not really a separate product. It's really - it's a feature subset of the Lexi Local product and what iCap Local really refers to is the capability that you could have human captioners or any type of third-party human or automatic system that uses iCap. You can use iCap across a local network using this Lexi Local server, so the Lexi Local server replaces using public cloud servers hosted and maintained by EEG to connect to your caption agency, and you can basically have a captioner.

Let's say you have an office in California and you have an office - you have an office in New York. You can have a captioner who's inside your network at the office in California and they can use the Lexi Local system as their iCap server to receive audio from a meeting that's happening in the New York office and send the captions back. So iCap Local is just essentially the ability to use the Lexi Local product as a server for transcription sources that are not Lexi automatic.

Regina: OK, thank you. And then could you please talk about how Lexi functions in live sporting event scenarios and then maybe talk about in what scenarios you think that Lexi is - is the most accurate?

Bill: OK, yeah. I mean, sporting events, it can - it can vary a lot how the event is mic'ed and kind of what the - what the style of the commenter is, what number of commenters they have. Basically, sometimes a sports stream has a number of different what I'd call degrees of difficulty beyond, you know, the optimal automatic captioning performance because, you know, in kind of your worst-case scenario, you're dealing with a mix that has a lot of crowd noise and, you know, squeaking sneakers or race car engines, so a lot of background noise, and you're potentially dealing with multiple announcers who, you know, might, depending on the style of the broadcast, they might speak over each other a lot, they might get extremely excited and, you know, kind of - kind of start shouting, they might use a lot of -

John: Extended speech.

Bill: They might use a lot of jargon, they might be famous athletes speaking English as a second language from other countries. So there can sometimes be a lot of issues cascading on top of each other that - that make that a challenging program. You know, that being said, when fewer of those things are a problem, it really can do quite well in sports.

You know, I mean, for example, it's probably - you're probably going to get a better out-of-the-box result on golf than you are on NASCAR, you know, just because of the fact that you have a relatively - the announcers tend to have a calmer performance, there tends to be less background noise, you know. Those - those things are all factors and, you know, so one thing that can sometimes be done depending on where you're sitting in the production chain is you can improve your results a lot if you're able to feed isolation microphones from the commenters into the system rather than the fully mixed program, including arena sound.

So - so that's definitely something that is - can be a challenge if you're receiving a completely packaged sports program from around the world, say, but when you have control over the production that can be a way to get much better automatic captioning results.

John: I will say, we have had some very good success captioning live sports. You know, one of our pretty significant users of Lexi–and there are several who use it for live sports–but one kind of uses it exclusively, you know, for - it's exclusively sports content, and they use Lexi quite extensively and they seem to have very favorable results. So it -

Bill: Yeah, sports itself is a broad category. I mean, everything from live games of different kinds to, you know, to news and coaches shows and press conferences. So -

John: Yeah, and they do all of the above, so we've definitely had some really good success stories with sports, but a lot of it, as Bill said, depends on, you know, the microphone and also, you know, using custom Topic Models for jargon and player names and things like that. That can really help you kind of improve that baseline accuracy.

Regina: And then can you switch back and forth between Lexi Local and Lexi via the cloud easily?

Bill: Yeah, well like I was saying about that, it's - it doesn't switch between those two automatically. Is it easy? It's - it's relatively easy. You need to change the IP address field in the - in the iCap server that the encoder is pointing to. You can do that using some HTTP API automation or through a point-and-click on the browser. So it's - I think it's fair to say it's easy, you know, if you are doing programs kind of like straight back to back or something every day. But yeah, I mean, you probably, you know, it's one more thing to be moving around. And I think in that case, that can be a strong argument for using Lexi on the cloud instead because, at that point, you have no moving parts in terms of the encoder needing to connect to different IP services. But, you know, it can be done.

Regina: Is Lexi Local compatible with other makes of caption encoders?

Bill: It's compatible with some. They have to use the iCap protocol so, you know, for example, there's some automation kind of channel-in-a-box systems. Imagine Versio is probably the one we work with most frequently that has a built-in iCap driver, so anything like that is compatible with Lexi Local. For older units that only support a telnet connection in or a telnet modem connection, we do - there are sold-separately proxy products that can connect those units to iCap.

A lot of the units that are out there that have an - they have a telnet input ability and they don't have an audio output, so being that you need to also get audio associated with the channel into Lexi, that's why with an encoder that doesn't talk iCap, you - basically, you need a converter card that both will take in a source of audio to associate with the channel and then will interface the captions on the terminal encoder.

Regina: OK. And any plans for allowing us to run virtualized Lexi Local?

Bill: Maybe. I think that's something we've gotten some requests for. I mean, when we ship the server, that allows us to kinda put together something that works right out of the box, is easy to configure, you know, we ship - we ship VM-based products for our Alta systems, and that definitely helps a lot of customers with very efficient workflows once they get it running, but the setup on the virtual machines is - I'll tell you it's generally a lot more complicated than when a turnkey system is shipped, so - so that's a factor as we build these things out. But - but I do think that having a fully virtualized version, that is something that'll definitely make sense for some customers and is probably going to come down the pipe at some point.

Regina: Alright, well that looks like that's all the time that we have for questions. Thank you all for - for your great questions and that concludes Best Practices for Closed Captioning and Broadcasting. I'd like to thank you all for joining us here today and take care!

John: Thanks, guys. If you have any questions just email me johnv@eegent.com or sales@eegent.com if you have any questions about pricing or just any other technical questions we didn't get to today. We're always here to help. Shoot us an email or give us a call, we're here. Thanks guys!