EEG Video and Ai-Media are helping schools and universities reach more students with accessible content!

On July 29, 2021, we hosted Back-to-School Captioning, a webinar about closed captioning and accessibility for education. Featured speakers Bill McLaughlin, CTO at EEG, and Phil Hyssong, Chief Customer Officer at Ai-Media, discussed educational trends and challenges, along with the solutions and services that can help provide a better student experience.

Back-to-School Captioning • July 29, 2021

Topics covered included:

- Best practices for achieving accessibility in education

- Closed captioning solutions for virtual and in-class learning

- Reaching students and audiences globally

- A live Q&A session

Bill and Phil discussed these solutions and services in depth:

- Ai-Live

- Lexi

- Smart Lexi

- Falcon

- AV610

- Video Remote Interpreting

- Captions & Transcripts

- Scribe

- Audio Description (Recorded Media)

- iCap Translate

- HLS World Languages with Falcon

- Localization & Translation

Transcript

Regina: Thank you all for joining us today for Back-to-School Captioning, a webinar where we'll talk through the best captioning solutions and services for schools and universities to deliver more accessible content to students. My name is Regina Vilenskaya and I'm the Director of Marketing at EEG. I will be your moderator today.

With me on this webinar are Bill McLaughlin and Phil Hyssong. Bill is the CTO at EEG and Phil is the Chief Customer Officer at Ai-Media. For today's webinar, Bill and Phil will share their experiences helping schools and universities become accessible. You'll find out about common challenges that educators and others face in providing quality content to students, and we'll feature the closed captioning solutions that can help.

So I am now going to welcome Bill and Phil to kick off Back-to-School Captioning. Welcome and thank you for being part of this webinar! Could you please start off by introducing yourselves and the companies you're representing today? Bill, let's start with you.

Bill: Thanks, Regina. For those of you who don't know, EEG has been in the closed captioning accessibility business for over 30 years. We are a technology-focused company. Our flagship product for many years has been closed caption encoders, or inserters, which put caption data into a video signal so that it can be picked up by caption decoders in a receiving television set, an AV system or, nowadays, an IP video stream. That has put us in contact with a lot of the world's leading broadcasters, as well as a lot of schools and universities, for applications in auditoriums, at graduations and even in individual classrooms.

We have recently joined forces with Ai-Media at a corporate level, and Ai-Media brings a lot of additional experience, especially in the education domain and in the services domain. I think the new combined company is really well equipped to serve all of the different needs that come up around classroom accessibility.

Regina: Thank you, Bill. And Phil?

Phil: Thank you, Regina. Yeah, this is a great opportunity. I have been in this industry for about 35 years. My hair used to be the color of Bill’s, but clearly that's not the case any longer. Most recently, I was the CEO of Alternative Communication Services, a rather significant player in the CART captioning industry in North America. Approximately 14 months ago, our company was acquired by Ai-Media, and I'm now the Chief Customer Officer for Ai-Media and enjoying that opportunity. So I have been in the industry in parallel with EEG for the past 30-plus years. I always joke when people ask, “When did you get started in captioning?” The reality is I kind of got started in captioning before captioning was even-

Bill: Beginning.

Phil: Yeah, it was kind of a day-one process for me, and it has been exciting to watch how captioning has evolved and grown. So I'm excited to be here today and looking forward to sharing some of our insights and also learning from our viewers. Thanks, Regina.

Accessibility in Education

Regina: Thank you. So the first topic we are going to discuss is accessibility in education which, essentially, is what this entire webinar is about. To start, how have you seen the nature of education and learning change in the last few years? Phil, let's start with you.

Phil: Great. Thank you, Regina. Boy, the changes over the past few years are a discussion in and of themselves, and how things have changed in the past 15 months is a whole other discussion. I don't want to just tell a bunch of war stories from my past in this webinar, but overall, when we started, accessibility in education was really designed specifically for advanced-study students. You saw services being provided to maybe the PhD candidate or someone else in advanced study. Then over time you saw services pushed down into master’s degree programs, then into four-year degree programs, and now we're seeing services in education as early as the fourth grade. It's almost becoming a universal design principle, right? Programs need to be made accessible; academic experiences need to be made accessible. So we're seeing increased awareness and growth in all areas, and it's exciting. It's a wonderful opportunity.

Specifically in the pandemic era, the last 15 to 18 months, we thought the industry might actually take a hard nosedive and, quite frankly, it went the other way. The pandemic was great for disability inclusion, with people making their content accessible to everyone. That has been phenomenal, and we've seen quite a bit of growth. Bill, I'm sure you can add some more to that.

Bill: Yeah, you've really seen mainstreaming on the AV side, with video streaming, Zoom and the opportunities they create to do closed captioning enabled by technology. It doesn't mean anymore that somebody is going to roll in the AV cart. It can be a software-driven workflow that's surprisingly affordable and doesn't require a lot of equipment, infrastructure or knowledge beyond what you'd need anyway. So I think the technological baseline for doing anything involving video streams and remote learning has been rising. On the other hand, that has meant the technology has met captioning where it needs to go, making it possible to provide captioning almost everywhere.

Live Captioning

Regina: Thank you. And that's a great transition to discuss live captioning. So first, what are some key points to consider when deciding which live captioning solutions to choose? Bill?

Bill: Yeah, I think there are two things that I’d always recommend new customers dive into. The first is how you're going to deliver the captions. Are we talking about a video stream where we want closed captions? If so, what's the video production format? Or are we looking to put captions in a commercial meeting platform, something like Zoom or Teams? Or are we looking to display them on a screen in an auditorium or classroom, or on a laptop or tablet that the students are going to bring? So what's the plan for actually showing the captions? And second, what's the plan for generating the captions?

Both of these come down to budget, to the desired look and feel and the needs of the student, and to what we're technologically comfortable with from a setup and workflow perspective, which is going to be different for a large event with an AV technician compared to an individual small classroom.

Regina: And what are some unique ways you've seen schools and universities move classes to the virtual space, and what are some of the benefits you've seen of this change? Phil?

Phil: Well, we're seeing schools using recorded media, and we're seeing schools be inclusive on platforms like Zoom and Teams, which we hear a lot about. When it comes to making that media accessible to everyone, we have found that live captioning continues to be the preferred method of accessibility, providing the consistency and quality that are necessary. And we're seeing live captioning used in all sorts of environments.

I mean, I have a story I can share about a medical student who was in a surgical suite. The nurse across from the doctor wore an iPad on her chest so that the captions were displayed there while the surgeon was operating, and the student was able to read and understand what was being said throughout the surgical suite. So, I mean, that's a pretty cool and extreme example, but really -

Bill: Regina asked for unique!

Phil: Yeah, technology really does make unique opportunities available, and the virtual aspect is becoming commonplace. Back in the day, it was somebody who hauled an AV cart into a room and provided services. Now we always say that as long as you have the Internet, we can meet your needs, and that typically is the case.

Bill: Thanks. Yeah, let's look at one of the most common examples first, and here we're going to start to get into more specific EEG and Ai-Media solutions. But even if you're not a customer right now, I think it's useful to understand the whole range of products that can be offered.

Ai-Live

Bill: Ai-Live is basically a browser-based platform that you can view on a laptop, tablet or smartphone. With this program, you provide audio from the instructor to a remote captioning transcription service, since getting a transcriptionist onsite, while it can sometimes be done, can be a lot more expensive and difficult, especially depending on your location.

So primarily, we're going to be looking at remote transcription options. That can be a human expert who provides steno or re-speaking services or, increasingly, automatic captioning technology, provided that the right preparation and vocabulary are used and the right vendor is selected.

Basically, the instructor’s audio is going to pass through either a meeting platform or an audio uplink application that we can provide for the computer. For the best-quality audio, especially in a lecture environment, it's good to have something like a lavalier mic, which will provide a really clear feed of what's being said to the remote captioning service. Within about five seconds, that audio passes through to the remote captioners and the captions come back down to the student through a private link.

The Ai-Live application provides accessibility, and it also provides basic note-taking. The whole transcript is downloadable and you can scroll back through it, so it has utility across the board and not only for accessibility purposes, even though accessibility can be especially important when a student has special needs.

Phil: Bill, if I can go back on that (the slide is fine right here), I want to stress to folks what you said in your opening statements about determining the need in your live captioning application. I think that is also critical when you start looking at the classroom and Ai-Live. I'm sure some of our guests are going to immediately ask, “Well, what about student questions? What about conversation in the classroom?” With remote situations and lavalier microphones, there is no question that there can be limitations. But we work to minimize those limitations to make the educational experience a full environment for the student.

And so again, regardless of what that situation is, we look at the audience, we look at what the need is and we can kind of make a solution that meets those needs. Thanks for that.

Bill: That’s absolutely right. I mean, your choice of a microphone, your choice of an uplink program, it really will have a lot to do with the audio environment that you're recording. And it also ties in, then, to the services that you'll use.

Live Captioning Service Tiers

So, in keeping with the Ai-Media one-stop-shop approach, we offer a really good example of the whole range of service tiers found pretty much across the industry. Starting from the untrained automatic captioning that's often included in something like a Teams meeting and moving up from there, any third-party service tier is something the Ai-Media/EEG team can offer.

We offer the Lexi service, which is an improved automatic captioning service that customers use on a self-service basis. It's very affordable and very flexible, and it can start captioning a compatible audio source within about 10 seconds of logging into the site. It's a good fit for a student in a simple environment where you need a low-cost solution, and it still gets a better result than a lot of out-of-the-box ASR (automatic speech recognition), thanks to training material you can upload yourself.

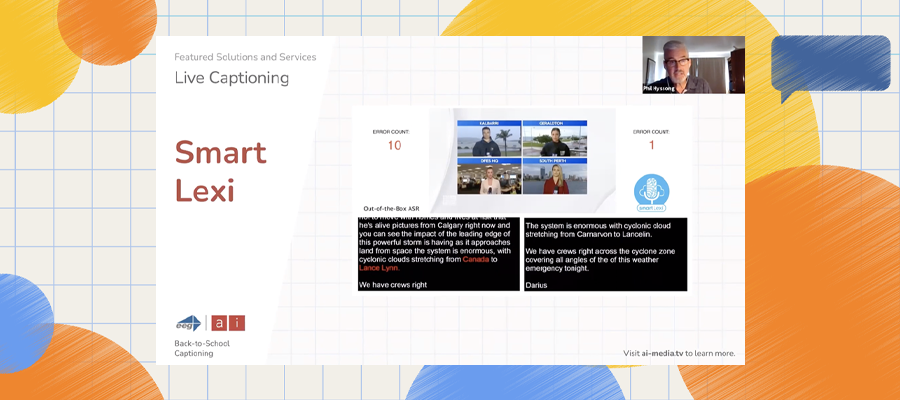

We also provide the Smart Lexi service, which uses a human curator who is also a trained captioning expert. The curator will help create a perfect Topic Model for a given class and will also help you with your audio setup, troubleshooting and basically making sure that the end user has real success with the solution. So it moves beyond the self-service technology domain into a real partner-managed service.

Finally, for cases where you really need the highest accuracy, or you have an audio environment where automatic captioning simply can't capture enough of what's said accurately enough, we'd recommend the premium service with a re-speaker or a steno captioner. That requires a trained person to do all of the captioning, and it's going to be the most expensive option. But even with the amazing advances in automatic captioning technology, it's still generally going to give you the best result.

Lexi

So to go back and talk about Lexi in more detail: Lexi is ordered through the eegcloud.tv webpage. You need an account and a basic plan, which can be paid self-serve with a credit card or through something like a university purchase order (PO). It has 10 different language settings, including regional English and Spanish accents, and you'll set it to match the language of the audio you're using.

The typical word accuracy is going to be in the mid-90% range, which often really will get the job done, especially if you have good training in there. With Lexi, the customer is responsible for uploading their own materials to train the models. What's going to be especially helpful is training proper names, places and events for a class that discusses things outside of general conversational knowledge.

So the basic automatic captioning is going to work very, very well for basic conversational knowledge. But specialized topics in technology, history, art or any other field involve names that aren't that well known to the general public (it's a good thing you're learning them in school). That's where training really shines through, producing a result that meets the student's needs instead of stumbling over the special words in a way that probably won't help the student understand the message and learn.
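For readers wondering what "word accuracy in the mid-90% range" measures: caption accuracy is commonly reported as word accuracy, that is, one minus the word error rate (WER). WER counts the substitutions, deletions and insertions needed to turn the caption text into a reference transcript, divided by the number of reference words. The webinar doesn't say exactly how Lexi's figure is measured, so treat this as a generic, minimal Python sketch; the `word_error_rate` helper and the sample sentences are hypothetical, and real scoring tools also normalize punctuation and numbers, which this sketch skips (it only lowercases).

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = fewest edits turning the first i reference words
    # into the first j hypothesis words (word-level Levenshtein distance)
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete every reference word
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert every hypothesis word
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or exact match)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# Hypothetical 20-word lecture sentence and an untrained ASR guess at it
reference = ("in this lecture we will discuss how the treaty of westphalia "
             "shaped european borders and the idea of state sovereignty")
hypothesis = ("in this lecture we will discuss how the treaty of west failure "
              "shaped european borders and the idea of state sovereignty")

wer = word_error_rate(reference, hypothesis)
print(f"WER {wer:.0%}, word accuracy {1 - wer:.0%}")
```

In this made-up example, a single untrained proper name ("Westphalia" heard as "west failure") costs two errors out of 20 words, which on its own pulls accuracy down to 90%. That is the kind of error custom training on names and places is meant to prevent.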

Phil: Bill, if I could jump in: for viewers who want to see Lexi and the service, we're using two EEG/Ai-Media products in this webinar, Falcon and Lexi. Folks can click on the More setting at the bottom of their Zoom screen and show the subtitles to see these products in action.

Bill: Exactly. The webinars are captioned using Lexi with a basic Smart Lexi model, where we've curated the EEG product names into Lexi as well as we can. A Zoom meeting is not always the best place to see beautiful captions from a visual perspective, but the accuracy is generally pretty good.

Smart Lexi

Bill: Here's an example from a news environment that shows the real difference in context that getting even a few proper names right can make, comparing basic out-of-the-box ASR built into products with a Smart Lexi example. Even though only a few words are involved, those word errors make a difference. But even more than that, you can really see that the context is dramatically affected by the work that's been done to curate a quality model.

Phil: Bill, on that slide I also want to highlight that the Smart Lexi side, on the right of your screen, is a full sentence ahead of the left side. That is something the EEG/Ai-Media products are known for: low latency, which means you speak and those words make it to the screen pretty quickly. That's pretty important, because it gives us what I've always called active communication as opposed to passive communication.

Active communication is where the viewer can use captions and build confidence that if they respond to something, they're going to be on the mark. Answering a question that was asked 10 or 15 seconds ago, by contrast, becomes a major issue for embarrassment and understanding. So our viewers who use our services are able to actively participate and build confidence that their comments are on the mark.

Bill: Absolutely, and that's important in entertainment and even more so in education. An often underrated thing in captioning is how important it is for the captions to be timely as well as accurate.
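Caption latency can be measured the way it is described here: for each word, compare when it was spoken with when it appeared on screen. The following is a minimal Python sketch that assumes you have such a timing log; the `timings` data and the `caption_lags` helper are hypothetical and not part of any EEG or Ai-Media product.

```python
from statistics import mean

# Hypothetical timing log for one spoken question:
# (word, seconds when spoken, seconds when its caption appeared on screen)
timings = [
    ("what", 10.0, 12.5),
    ("year", 10.4, 12.9),
    ("did", 10.7, 13.1),
    ("the", 10.9, 13.4),
    ("war", 11.2, 13.7),
    ("end", 11.5, 14.0),
]


def caption_lags(rows):
    """Per-word caption latency in seconds: display time minus speech time."""
    return [shown - spoken for _, spoken, shown in rows]


lags = caption_lags(timings)
print(f"mean lag {mean(lags):.1f} s, worst lag {max(lags):.1f} s")
```

With a lag of roughly 2.5 seconds, a viewer reads the question almost as it is finished; with a lag of 10 to 15 seconds, the conversation has moved on by the time they can respond, which is the passive situation Phil describes.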

Falcon

So the other piece of what we're using in this webinar today is EEG's Falcon, which is also on eegcloud.tv and is compatible not just with Lexi, and not even just with Ai-Media services, but with hundreds of captioners around North America who provide educational content.

Falcon is able to put captions inside various kinds of video streams, so it's compatible with meeting platforms like Zoom. It's also compatible with full video services like Facebook, YouTube and Twitch.

Using a video service rather than a conferencing service can be good for a lot of types of events or special lectures, because you can do television-style closed captions on these platforms. Effectively, the viewer (and that's true in Zoom, too) can push a CC button and get overlay captions that are optimized for the player, the screen size and the device they're viewing on, paired with higher-resolution video. Falcon will work with an RTMP stream or with an HLS (HTTP Live Streaming) stream, which makes it compatible with pretty much all of the video platforms you'd be likely to uplink to.

AV610

Bill: Another iCap target that, again, is compatible with any of these captioning services is the SDI encoder. Unlike Falcon and Lexi, which are software-only and available by subscription or pay as you go in the cloud, these SDI captioning products take a video signal in and send it back out. They're able to overlay captions on a full HD or even 4K UHD video signal and create a professional open caption display.

So this is used for things like stadium scoreboards or big screens in an auditorium, and it can be used in a classroom, too. Basically, this is an almost zero-latency solution in terms of video pass-through. Unlike something that involves streaming video to the internet and then playing it back through a player, this is usable at a live event or in a classroom in real time, because you're not going to see a delay of five seconds, 10 seconds or more between when the action is filmed and when it appears on screen after all of the buffering and transfers.

Video Remote Interpreting

Bill: Finally, there's the video remote interpreting service. I'll let Phil speak to that in more detail, but this is essentially American Sign Language (ASL) accessibility as an alternative to closed captioning.

Phil: Right. Thanks, Bill. And folks, you're probably thinking, “Okay, what does VRI (video remote interpreting) have to do with captioning?” The reality is nothing, other than a shared background. They're close cousins.

But video remote interpreting is frequently used in classrooms as well for students with hearing loss, and what we wanted to point out is that Ai-Media/EEG provides a full complement of services. Captions do not meet everyone's needs, and there are times when you will need certified interpreters in a classroom or at an event. We are able to provide that support as well, using much of the same infrastructure that we have in place, and we can provide those interpreting services on a 24/7 basis. That's all we wanted to share on that one.

Recorded Media

Regina: Thank you. We are now going to talk about recorded media. Recording classroom content is an excellent way to improve students' memory retention. But what I want to know is how closed captions come into play in helping those students succeed.

Phil: I'll start that off, Bill, and then I'll kick it over to you. So it really depends: different parts of the world handle recorded content differently in the educational realm.

Ai-Media's main headquarters is in Sydney, Australia. In Australia, much of the educational content is recorded: a professor will record, let's say, 15 different lessons over Zoom, and then those lessons are captioned so that they're fully accessible and ready to go.

The US, and North America generally, doesn't do that as much, at least from what we've seen from an educational standpoint. However, in North America we do see a lot of videos being used as part of an academic class. Back when I went to school, they'd show a film. We don't do that anymore; now we show a YouTube clip or something from another streaming service. Sometimes those pieces of media are already captioned, but many times they are not. So schools send us their recorded content: professors submit it, and it's sent to us on a no-touch basis, uploaded into our system, captioned and sent back out. That's how we see a lot of recorded media handled in North America. Bill, I'll kick it over to you for some more specifics.

Bill: Absolutely, yeah.

Captions & Transcripts

Bill: Another thing to note is that when classes are captioned live and then archived for future reference, the captions are typically going to stay with the recording. As long as you use something like Falcon or an EEG SDI encoder, a classroom lecture ingest system is generally going to be able to preserve those closed captions, which are in a standardized format that any lecture recording system in the industry really should be able to ingest and replay.

So you don't necessarily have to redo captions after doing live captions. However, if you expect the content to have long-term value and be viewed over and over, it may make sense to buy a bundled service package where you'll get fix-ups on those captions to make them perfect after the fact. Even with the highest-quality live caption service, if there's anything the writer doesn't understand while captioning, they may need to paraphrase or simply skip over a section. In a recorded environment, you're almost always going to receive 100% accurate results, since if there's something the editors don't understand, they can replay the video, look it up or ask a friend. You'll get the kind of captioning quality you'd expect from a recorded television program, like a sitcom, where editors have had the opportunity to use a script and get everything right. So you have the captions from live, and you have the ability to edit them.

Another thing that's common enough with recorded media is bundles and archives. A lot of universities have archives of video or audio content and may be interested in captioning them for searchability or accessibility reasons. That's something Ai-Media very commonly gets involved in, and it can offer a number of different quality tiers for projects like that.

Scribe

Bill: For self-editing captions, which schools might also want to do, especially for smaller projects, you can use EEG’s Scribe software, shown here. Scribe is Windows software, and if you're familiar with any video editing software, like Premiere or even simpler ones like iMovie, the interface will be pretty familiar. It lets you go through the video, usually at reduced speed, since it's hard to create or edit captions while watching the video at full speed; very, very few of us can type with that kind of speed and precision while listening at the same time. So you might play the video back at something like 50% or 75% speed.

Meanwhile, you can create captions from scratch, edit captions that originally ran live but might be imperfect, or download a Lexi speech recognition transcript, which may be usable as is but in many cases will benefit from a bit of editing. Scribe makes that editing fairly quick. It will still take quite a bit of time, which is why this type of work is very often outsourced. But for smaller projects where student labor can be used to caption the material, this can also be a very cost-effective option.
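To put "quite a bit of time" in rough numbers: one pass through a video at reduced playback speed takes the video's length divided by the speed. The hypothetical `review_minutes` helper below is a back-of-the-envelope Python sketch that counts playback time only; pauses for typing, rewinds and checking spellings all add to it, so real editing time will be higher.

```python
def review_minutes(video_minutes: float, playback_speed: float, passes: int = 1) -> float:
    """Playback minutes needed to watch a video `passes` times at a reduced speed.

    Counts playback only; pauses for typing and rewinds are not included.
    """
    if not 0 < playback_speed <= 1:
        raise ValueError("playback_speed should be in (0, 1], e.g. 0.5 for 50%")
    return video_minutes / playback_speed * passes


for speed in (0.5, 0.75):
    minutes = review_minutes(60, speed)
    print(f"60-minute lecture at {speed:.0%} speed: {minutes:.0f} minutes per pass")
```

For a 60-minute lecture, a single pass takes 120 minutes of playback at 50% speed and 80 minutes at 75% speed, before any time spent typing.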

Audio Description

Phil: Audio description is, again, not really a captioning service, but it's something we want to touch on. Ai-Media/EEG does provide this service. If you're not familiar with audio description, it gives a narrated description of what's on the screen, focusing on pertinent details. For example, a describer might say, “Phil Hyssong in a semi-darkened room with curtains pulled in the background.” That may be part of the narrative describing where I am and what my setting is, and it would be information relevant to the overall content. If someone were coming up behind me, the description might state, “Scary individual coming up behind Phil to scare him.” Whatever, something to that effect. I'm not a professional audio describer, so don't judge me on that, but that's the process that takes place.

This past Thanksgiving, in fact, the Macy's Thanksgiving Day Parade in New York City was audio described. They opened the audio channel so that people could hear it and experience it. You had the commentators talking about the parade and all of its activities, and then in the background you heard, “A large float in the shape of a teddy bear with yellow clothes,” as the describer explained what was going on. This is for people who are blind or have low vision, and it gives them equal access to the content.

One of the questions that was brought to us as we were preparing for this webinar was, “Do audio description and captioning compete?” In some ways they do. There are multiple channels within video and television, so you might have English captions, audio description and Spanish captions. It's a selection process based on what the viewer needs and wants. Oftentimes people will say, “Well, captions are conflicting with the audio.” But oftentimes those are two very different users, right? You have people with hearing loss who use the captions because they can't hear the audio, and you have people listening to the audio who would not typically access the captions. The same goes for audio description.

So in a simple way, yes, they do compete, but in a user way, it really comes down to the user preference of what they want to listen to or what they want to see. And that then gives them the necessary information. Bill, is there more that you'd like to add?

Bill: No, I mean, it's certainly something for a different audience. Aside from perhaps competing for budget and attention in some cases, I think the services are complementary, and a good overall disability strategy should include a plan for both of these issues.

Multilingual Captioning

Regina: We are now going to move to the last topic of this event, which is multilingual captioning. Talk of multilingual captioning seems to increase steadily every single year. So how are you seeing the need for educational content in multiple languages play out? Phil?

Phil: Thanks, Regina. I'll start this off and quickly hand the technical aspects over to you, Bill. But I'll tell you what we're seeing, Regina: the world is getting smaller as technology advances, and we're seeing the value in being able to provide multiple languages to folks. So we have spent a lot of time and money developing a multilingual captioning program so that we can take in English and send it out in any number of languages.

And we have found an effective way. We can’t share exactly how we do it because it's a bit of a trade secret, but we have developed ways of focusing the language for when we use machine translation, and it's fair to share with folks that it is machine translation. We have an extremely high accuracy rate on the translated language, and we have taken it in front of many native speakers who have said, “This is really good.” That matters, because oftentimes when you bring up machine translation with native speakers, they shake their heads and say, “This is not going to be effective for me.” But we have developed a way to make it effective.

In the educational realm, as well as in the business realm (what we call live enterprise), we are seeing projects that originate in English and are delivered in up to 12 simultaneous languages, so that people can simply click on their native language and fully participate. It's a pretty exciting time as this continues to develop. Bill?

Bill: Yeah. Yeah.

iCap Translate

Bill: In education, that probably doesn't apply to the majority of classes. But it can be very interesting where there's a distance learning library associated with the university that's intended for a worldwide public audience. Another use case we've seen a number of times is commencements and similar ceremonies, where you may want more participation from the parents and families of international students who might be watching a stream and looking for translation. Through iCap and any of the EEG products, that can be machine translation or full human translation, as long as you can source the supplier. For some languages that's easy; for others it's a little more challenging. But with an overall strategy to cover as many languages as possible, you can use human interpreters for the main languages, which are relatively easy to source and can justify a somewhat higher percentage of the budget, while covering other languages with machine translation.

HLS World Languages with Falcon

Bill: We're especially proud of the fact that when you use our Falcon product for streaming, you can do closed captioning out of Falcon with our HTTP Live Streaming (HLS) output, and that will work in pretty much any world language. I don't know if some people in the audience have encountered this problem before, but it can be really, really tough to get captions in languages that don't use European character sets through a lot of streaming workflows.

The traditional way of passing embedded captions in streaming workflows only really supports European language characters. It's a fairly old standard, but it's still what's popular around the web today. We've been able to develop a solution that will display live captions in almost any character set, which then supports Asian languages, Cyrillic languages, and some other languages that a lot of customers ask about but have a lot of trouble with in other workflows.
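To make the character-set point concrete: HLS players commonly accept subtitles as WebVTT, a plain-text format encoded in UTF-8, so any Unicode script can be carried, whereas legacy embedded captions (CEA-608) only define a limited, mostly Western European character set. The sketch below builds a small WebVTT document in Python. It is an illustration under that assumption only; the session did not say which caption format Falcon actually emits, and the cue text and timings are hypothetical.

```python
# Minimal sketch: build a WebVTT caption document, a text-based subtitle
# format that HLS players commonly accept. Assumption: illustrative only,
# not a description of how Falcon packages its captions.

def format_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp (HH:MM:SS.mmm)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def build_webvtt(cues: list[tuple[float, float, str]]) -> str:
    """Return a WebVTT document for a list of (start, end, text) cues."""
    lines = ["WEBVTT", ""]  # mandatory header followed by a blank line
    for start, end, text in cues:
        lines.append(f"{format_timestamp(start)} --> {format_timestamp(end)}")
        lines.append(text)
        lines.append("")  # a blank line terminates each cue
    return "\n".join(lines)


# Hypothetical cues in Japanese, Russian and Chinese: scripts that the
# CEA-608 character set cannot represent.
cues = [
    (0.0, 2.5, "ようこそ"),
    (2.5, 5.0, "Добро пожаловать"),
    (5.0, 7.25, "欢迎"),
]

vtt = build_webvtt(cues)
# WebVTT is UTF-8 text, so every script survives the round trip to bytes.
payload = vtt.encode("utf-8")
print(vtt)
```

The design choice here is simply that the captions travel as Unicode text in their own track rather than as codes packed into the video signal, which is why character coverage stops being a constraint.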

Localization & Translation

Bill: And Phil, do you want to speak to any of these, such as some of the Ai-Media services in document translation? We've really been talking a lot about live multi-language, but what about recorded as well?

Phil: Sure, Bill. Really, again, Ai-Media/EEG, we're not necessarily in the document translation business, if you will, but what we have found is that because of our extensive global network, and because we provide captioning that supports any language on programming, we can also provide support materials as necessary. So we use many of the same translation folks and many of the same native speakers in the language that you're working in.

We have many different kinds of avenues and a really extensive network of folks so that we can give our clients a one-stop shop. That's ultimately what we want to be able to do: when a customer comes to us and says, “Hey, I need to do this,” we are able to provide that level of support from beginning to end, so that they can walk out the door with a finished, packaged product that meets their needs. They don't have to run down the street to the next guy and figure out how to get the next piece of the project completed. So that really speaks to our overall service of text translation, as well as document translation, and so forth.

Live Q&A

Regina: Thank you. So that brings us to the Q&A session of this webinar where Bill and Phil will be answering your questions. So if you have any questions and you have not done so yet, please go ahead and enter them into the Q&A tool at the bottom of your Zoom window.

So one of the questions that we received while preparing for this webinar was, How can large universities provide live captioning affordably and at scale?

Bill: Yeah, I mean, I hope seeing the webinar gives you a little bit of visibility into that question. Scale can have different meanings in different cases. Certainly, if you're a large university and you want a captioning solution for every single class, I'm sure that could add up to tens of thousands of hours per semester, hundreds of thousands of hours per semester, or more. I would guess that at that type of scale, you would probably be looking at a tiered approach: for some large classes, or classes where there is a specific request or need, you might look into more expensive forms of captioning like human services, while for the bulk of the material, you would probably go for something automated, because the price difference can really be an order of magnitude, between paying something in the range of $10 per hour for an automatic solution and something in the range of $100 per hour for a top-tier premium solution.

So depending on the scale, you might find that it simply wouldn't be feasible to do everything at a premium level, but a lot of these solutions, I think, are well suited to a pick-and-choose approach: when the need exists, you can use the more premium solution, and otherwise you can fall back on something that's a lot more affordable.
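The tiered approach Bill describes is easy to budget roughly. The sketch below uses the ballpark rates mentioned in the answer (about $10 per hour automatic, about $100 per hour premium); the semester hour count and the premium shares are hypothetical placeholders, and real pricing will differ by provider and contract.

```python
# Rough budgeting sketch for a tiered captioning approach.
# Rates are the ballpark figures from the discussion; hour counts and
# premium shares below are hypothetical placeholders, not real quotes.

AUTOMATIC_RATE = 10.0   # USD per hour, approximate automatic captioning
PREMIUM_RATE = 100.0    # USD per hour, approximate premium/human captioning


def tiered_cost(total_hours: float, premium_share: float) -> float:
    """Cost when `premium_share` of the hours get premium captioning
    and the remaining hours fall back to automatic captioning."""
    if not 0.0 <= premium_share <= 1.0:
        raise ValueError("premium_share must be between 0 and 1")
    premium_hours = total_hours * premium_share
    automatic_hours = total_hours - premium_hours
    return premium_hours * PREMIUM_RATE + automatic_hours * AUTOMATIC_RATE


semester_hours = 20_000  # hypothetical total for one semester
for share in (0.0, 0.1, 0.25, 1.0):
    cost = tiered_cost(semester_hours, share)
    print(f"{share:>4.0%} premium: ${cost:,.0f}")
```

Because the two rates differ by roughly a factor of ten, even a small premium share dominates the total quickly, which is why reserving human captioning for classes with a specific request or need keeps large-scale coverage affordable.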

Phil: Go ahead, Regina. That's good. Bill covered it.

Regina: So what do you consider the best remote platform for teachers and students who are in the classroom when captions are being handled remotely? A follow-up to this question was whether Zoom would be the preferred platform and, if so, what alternatives there are. So what's the best platform out there, and what are the alternatives?

Bill: Yeah, I think it depends on what you're looking to do. If you imagine a class that was basically an in-person class, and the only thing you were seeking was captioning, I would tend to recommend a browser-based platform: that would be Ai-Live, or in the EEG family, the CaptionCast product. I think these products are merging a bit now. These are browser-based text display products that provide you with a live transcript and a history of what's been said, and they'll work on basically any kind of device you want to throw at them: a laptop, a tablet, a smartphone. Then you just need to uplink the audio to the captioning source, and that can be done through something like Zoom if you want to use a consumer platform.

There's also a free Windows program for uplinking audio to iCap that can be used with a microphone. In that case, the Zoom meeting is really just for the captioners to hear the audio; it's not where people are going to view the class. When you're teaching a class remotely, on the other hand, you would primarily be going through something like Zoom or Teams if your university uses those, or you may have something like Blackboard that provides a full-service digital classroom experience.

Regina: Juliette asks, What services have been proven to work for connecting to decoders over non-traditional phone connections, like without a standard copper landline?

Bill: So the encoder, I guess, is a dial-up encoder. A lot of those are still out there. The world has mostly moved away from that style of analog phone connection, and, unfortunately, because of that, dial-up encoders have become less and less reliable: even when you get them connected, the calls travel over digital telephone networks that are now primarily used to deliver Internet services, and the modem connections drop a lot. That's a real pain point for the live captioning community.

So where possible, I would certainly recommend upgrading to an encoder, like an iCap encoder, that's capable of communicating natively over IP. It's more secure, it's more reliable, and it works better. But when you need to use those dial-up encoders, what you need is an interface to a VoIP system. If you have an Internet phone system, you can get one of these PBX systems where, essentially, an analog phone jack comes out the other side and your call is converted into VoIP using something like a SIP provider. So if you're a university with a VoIP phone system, you can get a converter that turns that soft phone into an analog line for modem caption encoders, but your results with that are probably going to vary, frankly, so it's something that is getting a little hard to support.

Regina: Next question is, Can Lexi and Smart Lexi be used by multiple students simultaneously? (i.e. Does a private link need to be generated for each person using the service, or can one link be generated for all attendees to have access to captioning?)

Bill: Yes. With Ai-Live, all of the consumers within a room can share a link. If there were multiple rooms going on simultaneously, you would run parallel links and simply be billed for each. But for multiple users within a single classroom, there's not even any extra charge. They can just share the same link, and the captioning only needs to be generated once.

Phil: Yeah, I just want to add to that, Regina and Bill, that it really is also dependent on the school and the rules of the school. We have the capability to do that, but some institutions require certain levels of privacy. If that is the case, we can make those situations secure and private, so we have different levels that we can provide within the school. I just want our viewers to understand that we can meet that need, whatever it might be.

Bill: Well, the link can be open, or the link can be authenticated.

Phil: Right.

Bill: But either way, you never have to pay for the captioning service twice, if that, in fact, is the question.

Phil: Right.

Regina: So somebody had commented saying government black sites can't use cloud-based anything. Now, schools and universities also often have very rigid policies in place as far as security goes. So the question is, Do you have a captioning system that is on-prem to provide automatic captions?

Bill: Yes, for that I would recommend the EEG Lexi Local system, which will do up to 10 simultaneous streams of automatic captioning. And it's a server that we ship to you. It connects to caption encoders that are onsite through your local networking.

You can also use this with human services. But if you do, those human services will have to be onsite services, or if they're remote they would need to be connected through an approved corporate VPN that you set up. So basically the difference between that and normal iCap is EEG does not provide hosting or any type of connectivity support for this. It's all an on-prem system in the local network. But yes, please look at the Lexi Local product for that.

Regina: Thank you. Steve asks, Can you walk us through how you might integrate with the media server that houses pre-recorded media and whether you have integrations with YouTube, Kaltura, Panopto, etc.?

Bill: At least some of those, yes. Basically, the way those integrations work is that you can provide links to your programming or your account, that data can be automatically ingested for recorded captioning, and then the caption data is returned. Phil might be better equipped to speak to exactly which of those recorded platforms we're integrated with, but we do have integrations with a number of them, and we would be interested in building integrations with others if you contact our support team and lay out your needs.

But basically, the idea of the software integration is just to make it a little bit simpler for the customer to provide the videos and add the captions. In a very traditional, non-integrated workflow, the customer may be used to sending videos through FTP and then receiving the caption files back through FTP or email, and then they need to associate them with the video in their media asset management system or other video libraries themselves. The integrations cut out a number of those steps: you provide the correct security credentials, and the customer can simply say which videos they need, basically write a check, and see it happen.

Phil: Bill, I agree with what you've shared. I just want to encourage our viewers: if you have a platform that you would like to see us integrate with, let us know. Oftentimes what we do is... like, I know we are in dialogue with Panopto and Kaltura right now to make those things happen.

But what's really beneficial to us is when we can go to them and say, “Hey, Phil Hyssong from the University of Arizona would really like to see this happen and they have five students,” and so forth. Because many of the platforms - I don't want to say that they’re money-driven, but it's a return on investment and it's costly to build some of these interfaces, and so we need to be able to share with them and say, “Hey, look, you have a number of customers who are using your platform that would like additional access to it.” And that gives us a little bit more leverage to talk with them.

So if you have some particular platforms that you're interested in seeing us integrate with, please let us know that and we can take that information forward. Again, I can't promise you anything but -

Bill: Yeah, with these live streaming platforms, and recorded platforms in general, there's a division between platforms that publish a public API, which anybody can use with the correct authorization from the customer to access their content, of course, and platforms that take more of a walled-garden approach, where, essentially, the platform signs partners for the integrations you might be interested in. That's a bilateral business step as opposed to an open API, but we can help you navigate through any of that, really.

Regina: Do you have a way to support captioning in Microsoft Teams?

Phil: I can answer that. The simple answer is no. The better answer is that we are in dialogue with Microsoft right now to make that happen. They're in the process of testing our services so that we can use that API Bill's been talking about, that interface, which will allow us to be, if you will, a preferred provider. So we are in dialogue with them right now.

Bill: And Teams provides auto-captioning out of the box which is okay, but certainly there's going to be a lot of cases where you have a need for something more than that. And so I think that's why that integration is really valuable.

Regina: And so we have time for just one more question. Somebody said they were curious about how you train automatic captioning solutions for a podcast where guests change every day. Related to other cases within education, if there are different types of speakers from different regions, or teachers speaking about different subjects that would require different custom models, how do we make sure those automatic captioning solutions are trained to keep up with the base accuracy?

Bill: Yeah, so the Lexi-driven systems use what's called speaker-independent technology, so they don't rely on being trained on a particular speaker's voice. That's not as much of a challenge as it is with something like Dragon, for example, which is a speaker-dependent speech recognition system that relies on your voice. Speaker-dependent systems can in theory be more accurate, but they require a lot more training first, so they're not usually used for something like a media application.

When you have different guests, names, and topics, that definitely increases the amount of vocabulary training, in words and names, that you would need to do to get a totally optimal result each time. It's really just a question, though, of how far into that you need to dig. To program a specific day's guest into the system, you can pretty much enter the guest's name and a couple of things about their affiliations or the name of their book, whatever it may be, in less than five minutes. So a little bit of quick training like that is realistic for self-serve Lexi, and it's certainly within the scope of what you can ask the Ai-Media team to do with Smart Lexi. But most content that's live doesn't come with a complete script, and you may not know everything that's going to come up.

And so it's important to understand that, in general, we would all be ecstatic if our captioning was 99% accurate. If that means one name is going to be missed because you didn't know ahead of time that someone was going to talk about it, in context, that's live captioning. That's why, when you're doing something for re-air, as you typically would with a podcast, the most sensible thing to do is to get it as good as you can live and then fix it up to 100% after the event is complete. Getting to a true 100% live, even using premium human captioning, is not really practical.
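One common way to put a number on "99% accurate" is word error rate (WER): the word-level edit distance between a corrected reference transcript and the captions, divided by the number of reference words. The sketch below computes accuracy as 1 - WER in Python. It is a generic illustration, not the metric EEG or Ai-Media necessarily report (other caption-quality scoring schemes exist), and the example transcripts are hypothetical.

```python
# Minimal sketch: caption accuracy as 1 - word error rate (WER).
# WER = (substitutions + deletions + insertions) / reference word count.
# Generic illustration only; not necessarily the metric any vendor uses.

def word_accuracy(reference: str, hypothesis: str) -> float:
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j caption words (one row of the DP table).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from captions
                curr[j - 1] + 1,     # insertion: extra word in captions
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    wer = prev[-1] / len(ref)
    # Note: with many insertions WER can exceed 1, making this negative.
    return 1.0 - wer


# Hypothetical 100-word segment where a single name is misrecognized.
reference = " ".join(f"word{i}" for i in range(100))
hypothesis = reference.replace("word42", "wordforty")
print(f"{word_accuracy(reference, hypothesis):.2%}")
```

In this framing, one missed guest name in a hundred words is exactly the 1% that the post-event fix-up pass is meant to recover.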

Regina: Alright, well that brings us to the end of the webinar. I would like to thank all of the attendees for joining us today. And a huge thank you goes to Bill and Phil for leading this webinar, and thank you to Wes Long for helping behind the scenes with delivering the live captions.

So if you have any questions about EEG, Ai-Media or any of the topics that we discussed today, please feel free to reach out to us. Thank you all again, and we hope to see you at our next webinar.

Phil: Thanks so much.

Bill: And yeah, I mean, additional questions, things we didn't get to, please feel free to email us. We'd love to talk to you.

Phil: Thank you all.